Lab environment

| Hostname | IP | Notes |
|---|---|---|
| k8s-80 | 192.168.188.80 | 2 vCPU / 2 GB RAM, master |
| k8s-81 | 192.168.188.81 | 1 vCPU / 1 GB RAM, node |
| k8s-82 | 192.168.188.82 | 1 vCPU / 1 GB RAM, node |

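There are two common ways to deploy Kubernetes. The first is from binaries, which is highly customizable but complex to deploy and error-prone. The second is with the kubeadm tool, which is simple to deploy but leaves far less room for customization. This guide uses kubeadm.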

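Note: none of the YAML files or resources created in this lab specify a namespace, so everything lands in the default namespace. In production, always state the namespace explicitly; otherwise resources are created in default.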

Environment preparation

Unless stated otherwise, run every step on all machines.

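1.1 Disable SELinux and the firewall

The firewall is turned off here only to keep the lab simple. In production, keep it enabled.

    # SELinux
    # Disable permanently. Edit /etc/selinux/config directly: /etc/sysconfig/selinux is a
    # symlink to it, and sed -i would replace the symlink with a regular file.
    sed -i 's#^SELINUX=enforcing#SELINUX=disabled#' /etc/selinux/config
    # Disable for the current boot
    setenforce 0
    # Firewall
    systemctl disable firewalld
    systemctl stop firewalld
    systemctl status firewalld

1.2 Disable the swap partition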

Once the system starts swapping, performance drops sharply, so Kubernetes generally requires swap to be turned off.
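Before and after changing anything, it can help to check whether swap is actually in use. The following is a minimal sketch, assuming a Linux host where /proc/swaps lists active swap areas; the function name `swap_is_active` and its optional file argument are illustrative, not part of the original procedure.

```shell
# Succeed when at least one swap area is active.
# /proc/swaps has one header line followed by one line per active swap area.
# The optional file argument exists only so the check can be tried on a sample file.
swap_is_active() {
  swaps_file="${1:-/proc/swaps}"
  # Any line after the header means swap is in use.
  awk 'NR > 1 { found = 1 } END { exit !found }' "$swaps_file"
}
```

Calling `swap_is_active && echo "swap is still on"` after `swapoff -a` should print nothing if swap was disabled.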

    # Option 1: turn swap off now and comment it out of /etc/fstab
    swapoff -a
    sed -ri 's/.*swap.*/#&/' /etc/fstab
    # Option 2: tell the kubelet to tolerate swap
    echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet

1.3 Configure a yum mirror in China

    cd /etc/yum.repos.d/
    mkdir bak
    # Move only the repo files, so that bak is not moved into itself
    mv ./*.repo bak/
    wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
    wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
    yum makecache

1.4 Upgrade the kernel

Docker and Kubernetes rely on fairly recent kernel features (ipvs support, for example), so a 4.0+ kernel is generally recommended.
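To decide whether a host needs the upgrade at all, the running kernel version can be compared with the 4.0 minimum. This is a small sketch; `kernel_at_least` is a hypothetical helper name, and it only looks at the major and minor numbers of a version string such as the output of `uname -r`.

```shell
# kernel_at_least VERSION MAJOR MINOR
# Succeeds when VERSION (for example the output of `uname -r`) is >= MAJOR.MINOR.
kernel_at_least() {
  version="$1"; want_major="$2"; want_minor="$3"
  major="${version%%.*}"        # text before the first dot
  minor="${version#*.}"         # drop "MAJOR."
  minor="${minor%%[!0-9]*}"     # keep only the leading digits
  [ "$major" -gt "$want_major" ] ||
    { [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; }
}
```

For example, `kernel_at_least "$(uname -r)" 4 0 || echo "kernel upgrade needed"`.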

    ### Import the public key
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    ### Install ELRepo
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    ### Load the elrepo-kernel metadata
    yum --disablerepo=\* --enablerepo=elrepo-kernel repolist   # 34 entries
    ### List the available rpm packages
    yum --disablerepo=\* --enablerepo=elrepo-kernel list kernel*
    ### Install the long-term-support kernel
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64
    ### Remove the old tools packages
    yum remove kernel-tools-libs.x86_64 kernel-tools.x86_64 -y
    ### Install the new tools package
    yum --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt-tools.x86_64
    ### Show the boot menu order
    awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg
    CentOS Linux (4.4.183-1.el7.elrepo.x86_64) 7 (Core)
    CentOS Linux (3.10.0-327.10.1.el7.x86_64) 7 (Core)
    CentOS Linux (0-rescue-c52097a1078c403da03b8eddeac5080b) 7 (Core)
    # Entries are numbered from 0 and a new kernel is inserted at the top
    # (the new 4.4 kernel is at position 0, the old 3.10 kernel at position 1), so select 0.
    grub2-set-default 0
    # Reboot and check
    reboot

Ubuntu 16.04

    # Open http://kernel.ubuntu.com/~kernel-ppa/mainline/ and pick the version you need (4.16.3 is used as the example here).
    # Then download the following .deb files for your architecture:
    Build for amd64 succeeded (see BUILD.LOG.amd64):
      linux-headers-4.16.3-041603_4.16.3-041603.201804190730_all.deb
      linux-headers-4.16.3-041603-generic_4.16.3-041603.201804190730_amd64.deb
      linux-image-4.16.3-041603-generic_4.16.3-041603.201804190730_amd64.deb
    # Install them, then reboot
    sudo dpkg -i *.deb

1.5 Install ipvs

ipvs is a kernel module with very high forwarding performance, so it is usually the preferred choice.

    # Install the IPVS tools
    yum install -y conntrack-tools ipvsadm ipset conntrack libseccomp
    # Load the IPVS modules
    # The heredoc delimiter is quoted so that ${...} and $? are written to the file literally
    cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'
    #!/bin/bash
    ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
    for kernel_module in ${ipvs_modules}; do
      /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
      if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
      fi
    done
    EOF
    # Verify
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

1.6 Kernel parameter tuning

The main goal of tuning the kernel parameters is to make the system better suited to running Kubernetes.
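A misspelled sysctl key is easy to miss, because `sysctl --system` only reports it as an error and carries on. The sketch below is one way to list keys in a config that do not exist on the running kernel; it assumes the usual mapping of a key such as `net.ipv4.ip_forward` to the file /proc/sys/net/ipv4/ip_forward, and the function name and optional root argument are hypothetical.

```shell
# Read a sysctl config on stdin and print every key that has no matching
# file under /proc/sys (dots in the key become slashes in the path).
# The optional root argument exists only so the check can be tried on a sample tree.
unknown_sysctl_keys() {
  root="${1:-/proc/sys}"
  # Strip comments and everything from "=" onwards; read trims the spaces.
  sed -e 's/#.*//' -e 's/=.*//' |
  while read -r key; do
    if [ -z "$key" ]; then continue; fi
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -e "$path" ]; then printf '%s\n' "$key"; fi
  done
}
```

Running `unknown_sysctl_keys < /etc/sysctl.d/k8s.conf` should print nothing when every key is valid. Keys under net.bridge show up as unknown until the br_netfilter module is loaded.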

    # The net.bridge.* keys exist only once the br_netfilter module is loaded
    modprobe br_netfilter
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl = 15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.netfilter.nf_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF
    # Apply immediately
    sysctl --system

1.7 Install Docker

Docker is used here as the container runtime managed by Kubernetes; it is one of the commonly used options.

    # Step 1: install the required system tools
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Step 2: add the repository
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Step 3: point the repository at the Aliyun mirror
    sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo
    # Step 4: refresh the cache and install Docker CE
    yum makecache fast
    yum -y install docker-ce
    # Step 5: start Docker and enable it at boot
    service docker start
    systemctl enable docker
    # Step 6: registry mirror
    mkdir -p /etc/docker
    tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://niphmo8u.mirror.aliyuncs.com"]
    }
    EOF
    systemctl daemon-reload
    systemctl restart docker

1.8 Synchronize cluster time

master

    [root@k8s-80 ~]# vim /etc/chrony.conf
    [root@k8s-80 ~]# grep -Ev "#|^$" /etc/chrony.conf
    server 3.centos.pool.ntp.org iburst
    driftfile /var/lib/chrony/drift
    makestep 1.0 3
    rtcsync
    allow 192.168.0.0/16
    logdir /var/log/chrony

node

    vim /etc/chrony.conf
    grep -Ev "#|^$" /etc/chrony.conf
    server 192.168.188.80 iburst
    driftfile /var/lib/chrony/drift
    makestep 1.0 3
    rtcsync
    logdir /var/log/chrony

all

    systemctl restart chronyd
    # Verify
    date

1.9 Hostname mapping

master

    [root@k8s-80 ~]# vim /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.188.80 k8s-80
    192.168.188.81 k8s-81
    192.168.188.82 k8s-82
    [root@k8s-80 ~]# scp -p /etc/hosts 192.168.188.81:/etc/hosts
    [root@k8s-80 ~]# scp -p /etc/hosts 192.168.188.82:/etc/hosts

1.10 Configure the Kubernetes repository

This uses the Aliyun mirror; the tutorial is at https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.3e221b11KGjWvc

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    #setenforce 0
    #yum install -y kubelet kubeadm kubectl
    #systemctl enable kubelet && systemctl start kubelet
    # Note
    # The upstream site offers no sync mechanism, so the index GPG check may fail.
    # In that case install with: yum install -y --nogpgcheck kubelet kubeadm kubectl
    # Version 1.22.3 is installed here
    yum makecache --nogpgcheck
    # kubeadm and kubectl are commands; kubelet is a service
    yum install -y kubelet-1.22.3 kubeadm-1.22.3 kubectl-1.22.3
    systemctl enable kubelet.service

1.11 Pull the images

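The images cannot be pulled directly from inside mainland China, so here they are built with Aliyun Codeup together with Aliyun Container Registry and pulled from there.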

master

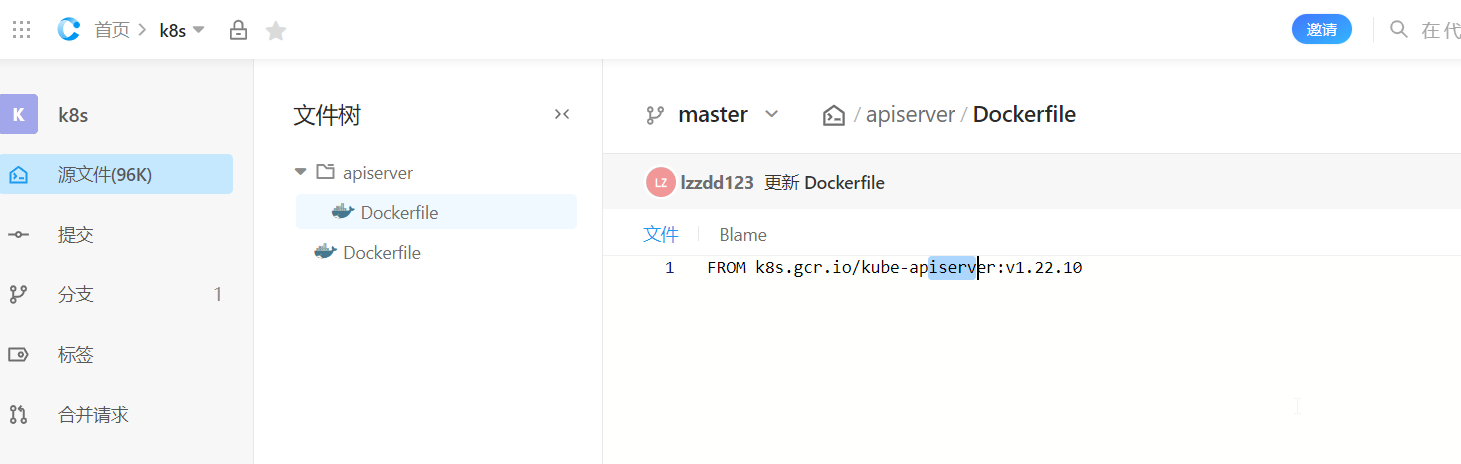

    # Print the list of images kubeadm will use. A configuration file can be used to customise any image or image repository.
    # Images from the same minor release are compatible; a different patch version does not matter
    [root@k8s-80 ~]# kubeadm config images list
    I0526 12:52:43.766362 3813 version.go:255] remote version is much newer: v1.24.1; falling back to: stable-1.22
    k8s.gcr.io/kube-apiserver:v1.22.10
    k8s.gcr.io/kube-controller-manager:v1.22.10
    k8s.gcr.io/kube-scheduler:v1.22.10
    k8s.gcr.io/kube-proxy:v1.22.10
    k8s.gcr.io/pause:3.5
    k8s.gcr.io/etcd:3.5.0-0
    k8s.gcr.io/coredns/coredns:v1.8.4

Codeup (the new code hosting service): https://codeup.aliyun.com/

1.在codeup新建仓库,然后新建目录,再创建dockfile文件

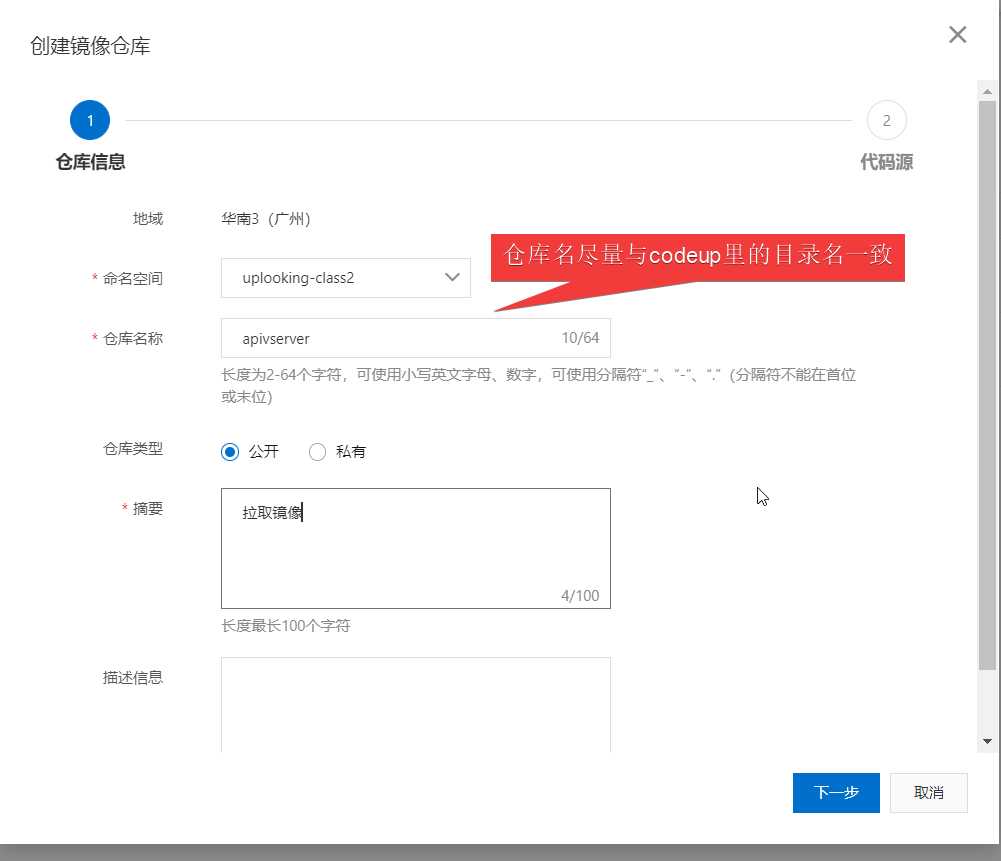

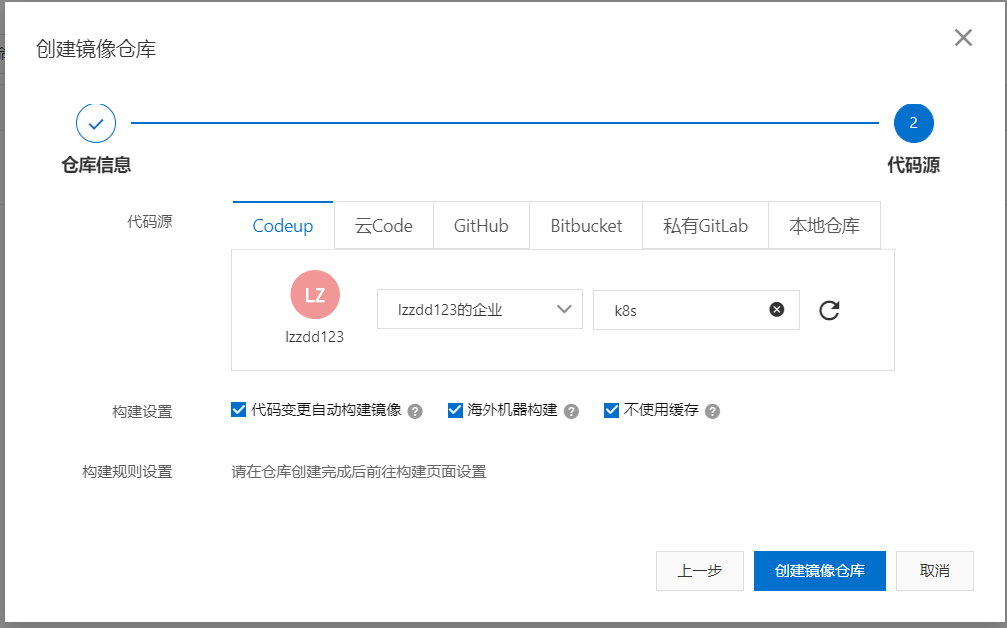

2.返回容器镜像服务,创建镜像仓库

3.绑定codeup

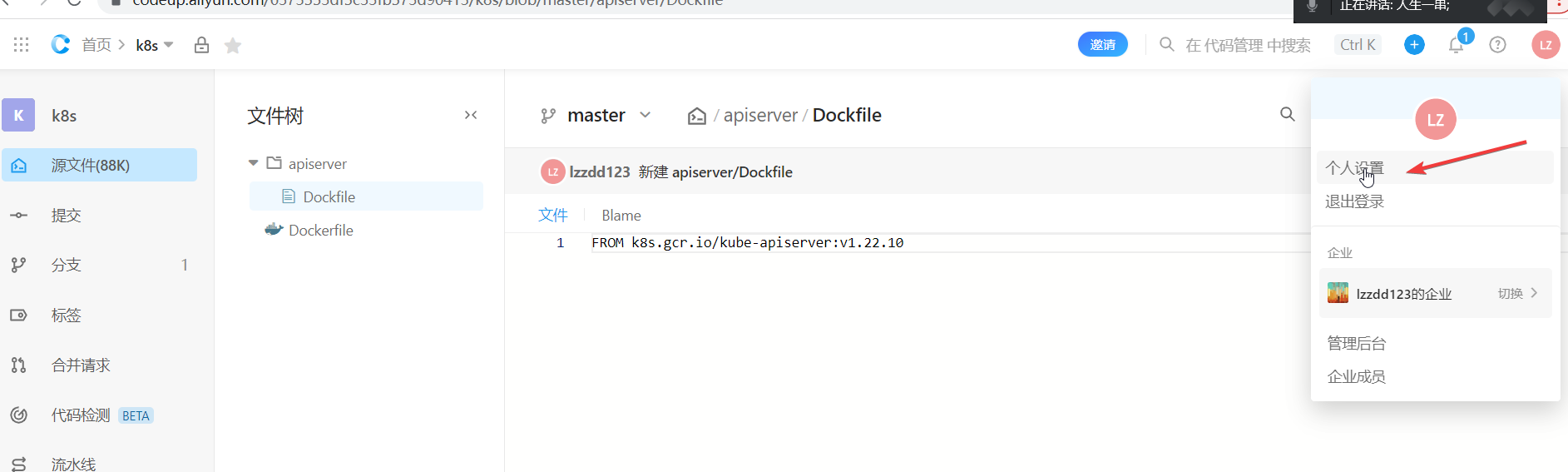

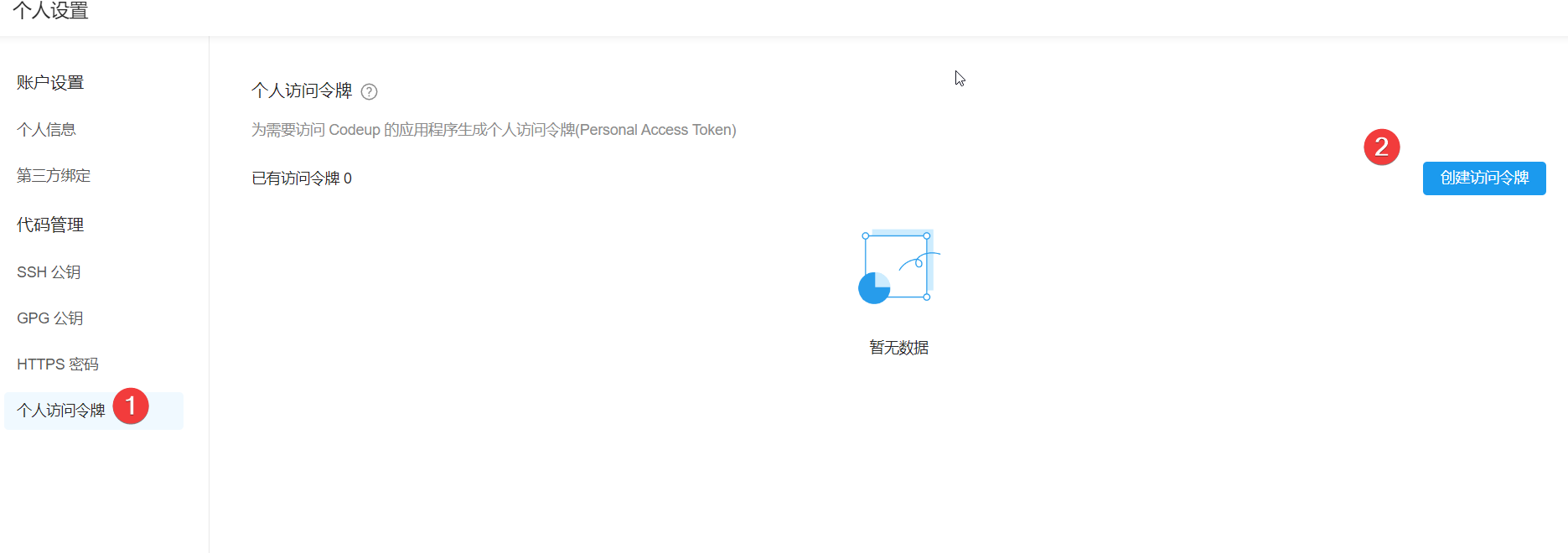

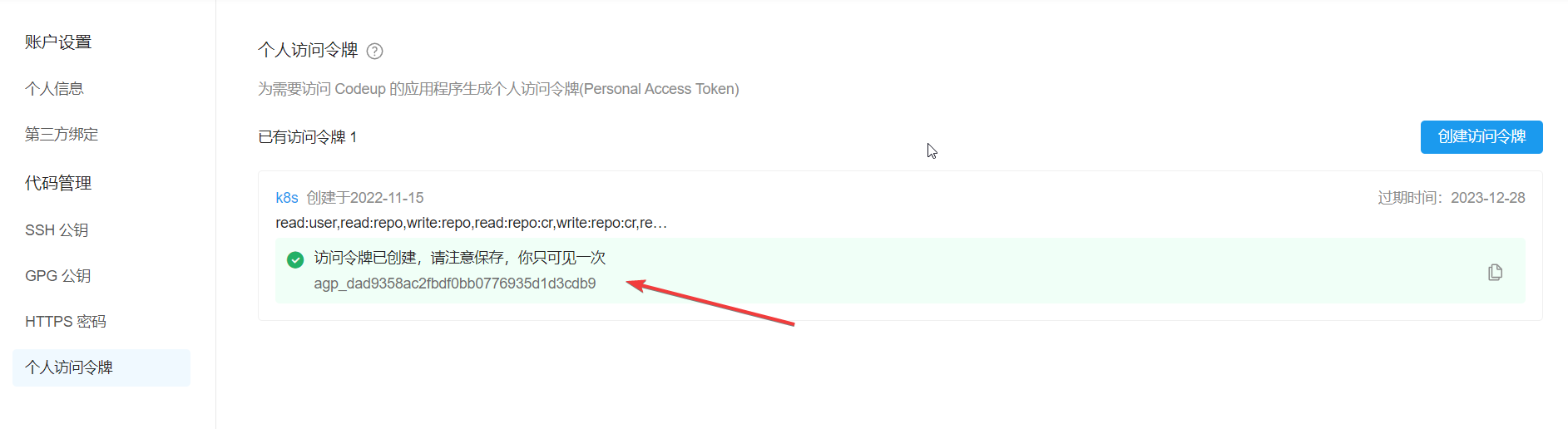

4.建立个人访问令牌

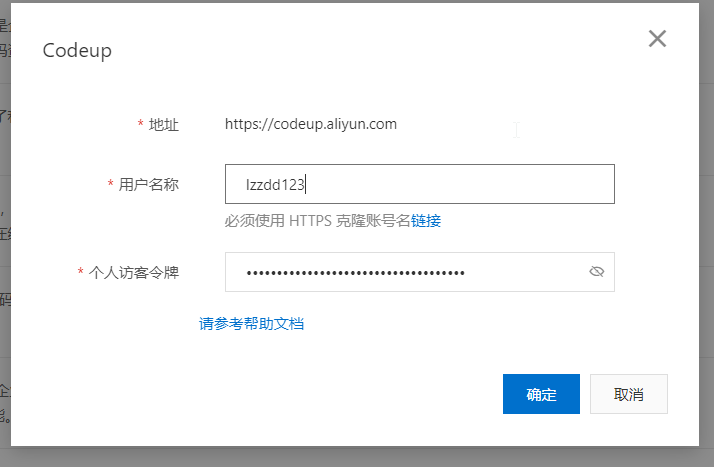

5.返回容器镜像服务,填写绑定codeup的个人访问令牌

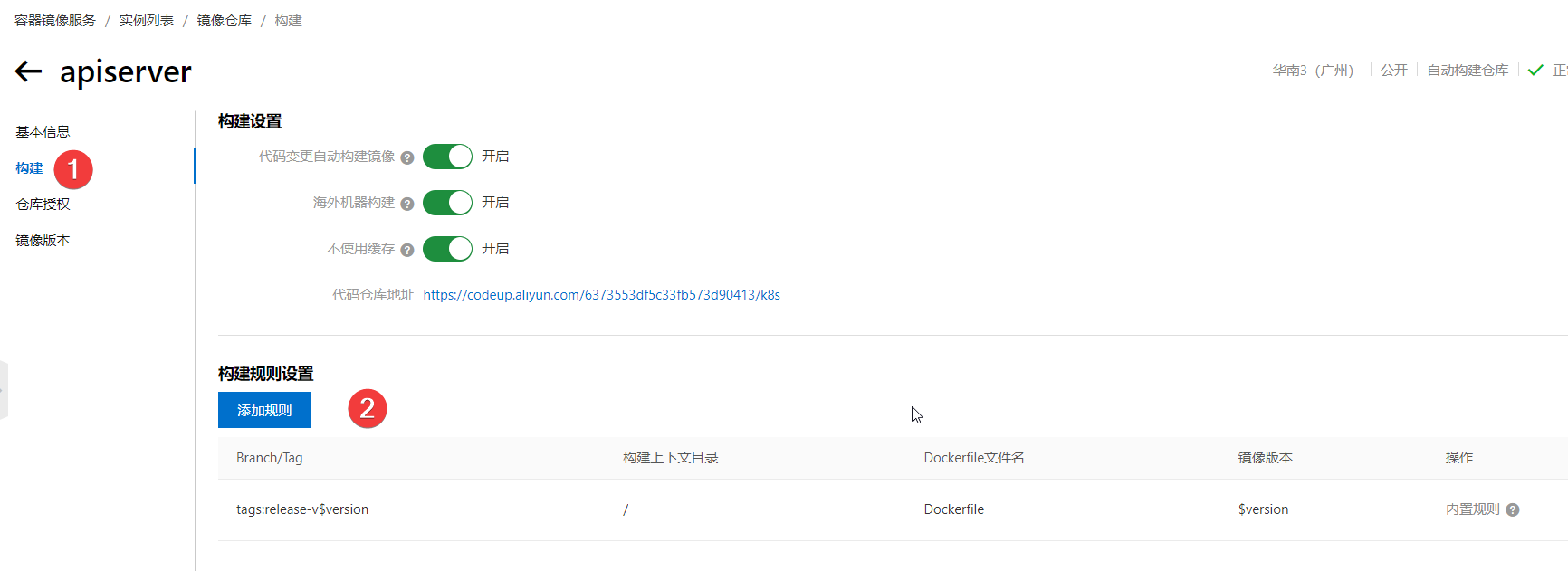

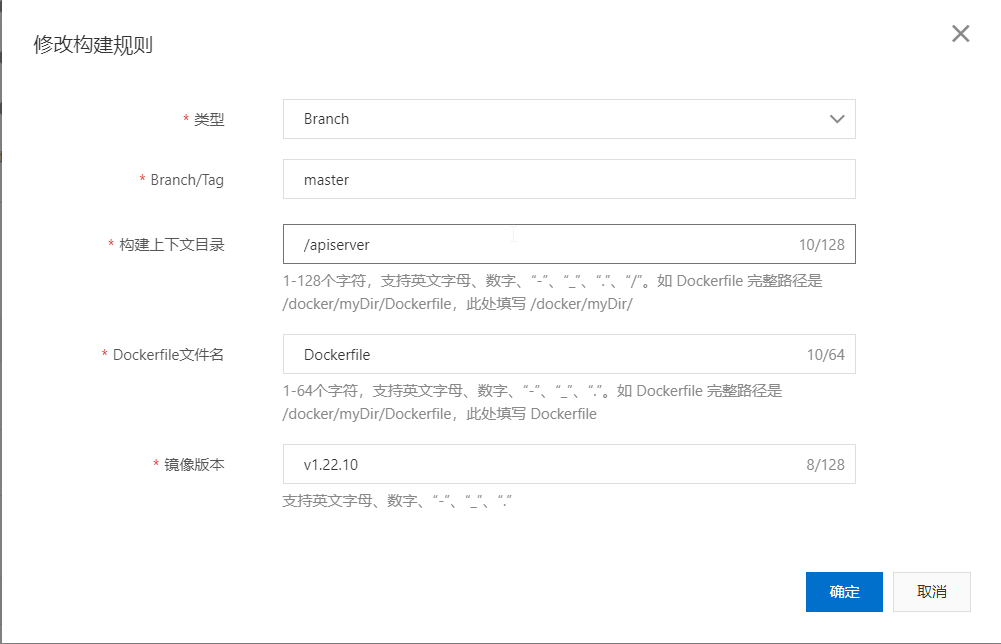

6.修改规则,要指定分支目录里的dockfile文件

7.拉取镜像
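Pulling from a mirror and restoring the original name is the same two commands for every image, so it can be generated in a loop. The sketch below only prints the commands rather than running them; `mirror_commands` is a hypothetical helper, and it assumes the mirror stores each image under its last path component (for example coredns:v1.8.4 for k8s.gcr.io/coredns/coredns:v1.8.4).

```shell
# mirror_commands MIRROR IMAGE...
# Print the docker pull and docker tag commands that fetch each image from
# MIRROR and give it back the name kubeadm expects. Nothing is executed.
mirror_commands() {
  mirror="$1"
  shift
  for image in "$@"; do
    name="${image##*/}"    # last path component, e.g. kube-proxy:v1.22.10
    printf 'docker pull %s/%s\n' "$mirror" "$name"
    printf 'docker tag %s/%s %s\n' "$mirror" "$name" "$image"
  done
}
```

For example, `mirror_commands registry.cn-shenzhen.aliyuncs.com/uplooking k8s.gcr.io/pause:3.5 k8s.gcr.io/etcd:3.5.0-0` prints four commands; pipe the output into `sh` once it looks right.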

all

    # Pull the images once they have been built
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/kube-apiserver:v1.22.10
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/kube-controller-manager:v1.22.10
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/kube-scheduler:v1.22.10
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/kube-proxy:v1.22.10
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/pause:3.5
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/etcd:3.5.0-0
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/coredns:v1.8.4
    # Retag them with the names printed by kubeadm config images list
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/kube-apiserver:v1.22.10 k8s.gcr.io/kube-apiserver:v1.22.10
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/kube-controller-manager:v1.22.10 k8s.gcr.io/kube-controller-manager:v1.22.10
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/kube-scheduler:v1.22.10 k8s.gcr.io/kube-scheduler:v1.22.10
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/kube-proxy:v1.22.10 k8s.gcr.io/kube-proxy:v1.22.10
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/pause:3.5 k8s.gcr.io/pause:3.5
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/etcd:3.5.0-0 k8s.gcr.io/etcd:3.5.0-0
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/coredns:v1.8.4 k8s.gcr.io/coredns/coredns:v1.8.4

    # Pulls from a second registry (registry.cn-guangzhou.aliyuncs.com/testbydocker)
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/apiserver:v1.22.10
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/controller:v1.22.10
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/etcd:v3.5.0
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/pause:v3.5
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/proxy:v1.22.10
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/scheduler:v1.22.10
    docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/coredns:v1.8.4

1.12 Node initialization

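master

    [root@k8s-master ~]# kubeadm init --help
    [root@k8s-80 ~]# kubeadm init --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.188.80

Note: the pod network CIDR must match the flannel network.

During initialization the following error can appear:

    [root@k8s-80 ~]# tail -100 /var/log/messages   # check the log
    failed to create kubelet: misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "systemd"

The kubelet and Docker are configured with different cgroup drivers, so the control-plane containers cannot start. The message names whichever pair is in use; what matters is that the two differ. On this setup Docker reports cgroupfs:

    [root@k8s-master ~]# docker info |grep cgroup   # show the driver
    Cgroup Driver: cgroupfs

There are two ways to fix this: change Docker or change the kubelet. This guide changes Docker; for the second approach see https://www.cnblogs.com/hongdada/p/9771857.html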
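To see both sides of the mismatch at once, the two drivers can be extracted and compared. This is a rough sketch under two assumptions: that `docker info` prints a "Cgroup Driver:" line as shown above, and that kubeadm has written the kubelet configuration to /var/lib/kubelet/config.yaml with a `cgroupDriver` field. Both function names are hypothetical.

```shell
# Read `docker info` output on stdin and print the cgroup driver it reports.
docker_cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Print the cgroupDriver value from a kubelet config file.
# The default path is an assumption about where kubeadm writes the file.
kubelet_cgroup_driver() {
  awk '$1 == "cgroupDriver:" { print $2; exit }' "${1:-/var/lib/kubelet/config.yaml}"
}
```

A quick comparison could then be `[ "$(docker info 2>/dev/null | docker_cgroup_driver)" = "$(kubelet_cgroup_driver)" ] || echo "cgroup drivers differ"`.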

    [root@k8s-80 ~]# vim /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://niphmo8u.mirror.aliyuncs.com"]
    }
    # The "exec-opts" line is the addition (JSON itself cannot carry comments)
    systemctl daemon-reload
    systemctl restart docker
    # Delete the files left by the first init attempt; the init output lists them (rm -rf XXX)
    # Then run the reset command
    kubeadm reset
    # Initialize again
    [root@k8s-80 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.188.80
    # The end of the init output shows two follow-up steps: create the kubeconfig directory
    # on the master, and a join command whose token is valid for 24h and must be used
    # within that time to add nodes
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    # get nodes shows the status, roles and version of each node
    # kubectl get no   or   kubectl get nodes
    [root@k8s-80 ~]# kubectl get no
    NAME     STATUS     ROLES                  AGE    VERSION
    k8s-80   NotReady   control-plane,master   6m9s   v1.22.3

node

    [root@k8s02 ~]# kubeadm join 192.168.188.80:6443 --token cp36la.obg1332jj7wl11az \
      --discovery-token-ca-cert-hash sha256:ee5053647a18fc69b59b648c7e3f7a8f039d5553531d627793242d193879e0ba
    This node has joined the cluster
    # When the token has expired, generate a new join command with:
    kubeadm token create --print-join-command
    # The author's own join command, from a different environment:
    kubeadm join 192.168.91.133:6443 --token 3do9zb.7unh9enw8gv7j4za \
      --discovery-token-ca-cert-hash sha256:b75f52f8e2ab753c1d18b73073e74393c72a3f8dc64e934765b93a38e7389385

master

    [root@k8s-80 ~]# kubectl get no
    NAME     STATUS     ROLES                  AGE     VERSION
    k8s-80   NotReady   control-plane,master   6m55s   v1.22.3
    k8s-81   NotReady   <none>                 18s     v1.22.3
    k8s-82   NotReady   <none>                 8s      v1.22.3
    # Every get command accepts --namespace or -n to select a namespace. This is very useful
    # for the pods in kube-system, which are the services Kubernetes itself needs to run.
    [root@k8s-80 ~]# kubectl get po -n kube-system   # some services are not usable yet because the network plugin is missing
    [root@k8s-master ~]# kubectl get po -n kube-system
    NAME                                 READY   STATUS    RESTARTS   AGE
    coredns-78fcd69978-n6mfw             0/1     Pending   0          164m
    coredns-78fcd69978-xshwb             0/1     Pending   0          164m
    etcd-k8s-master                      1/1     Running   0          165m
    kube-apiserver-k8s-master            1/1     Running   0          165m
    kube-controller-manager-k8s-master   1/1     Running   1          165m
    kube-proxy-g7z79                     1/1     Running   0          144m
    kube-proxy-wl4ct                     1/1     Running   0          145m
    kube-proxy-x59w9                     1/1     Running   0          164m
    kube-scheduler-k8s-master            1/1     Running   1          165m

1.13 Install the network plugin

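About flannel

Kubernetes relies on a third-party network plugin to implement its networking, so installing one is a prerequisite. Several plugins exist; the common ones are flannel, calico and canal (flannel + calico). They all provide the basic network functions, such as giving every node an IP network for its pods.

Kubernetes defines the network model but leaves the implementation to network plugins. The main job of a CNI plugin is to let pods communicate across hosts. Common CNI plugins:

1. Flannel
2. Calico
3. Canal
4. Contiv
5. OpenContrail
6. NSX-T
7. Kube-router

This guide uses flannel. Save the following YAML file and upload it to the server: https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml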
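Because the pod CIDR given to kubeadm init has to match the network in the flannel manifest, it is worth checking the manifest before applying it. The sketch below assumes the manifest contains a JSON line of the form `"Network": "10.244.0.0/16"`; the function names are hypothetical.

```shell
# Read a kube-flannel manifest on stdin and print the CIDR of its "Network" entry.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' | head -n 1
}

# pod_cidr_matches CIDR
# Succeed when the manifest on stdin uses exactly the given pod CIDR.
pod_cidr_matches() {
  [ "$(flannel_network)" = "$1" ]
}
```

For example, `pod_cidr_matches 10.244.0.0/16 < flannel.yml || echo "pod CIDR and flannel network differ"`.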

master

    [root@k8s-80 ~]# ls
    anaconda-ks.cfg flannel.yml
    # Some commands need a configuration file. apply takes such a file and applies it to
    # resources in the cluster. The same can be done from standard input (STDIN), but a file
    # is preferable because it records how the cluster is used and which configuration was applied.
    # Almost any configuration can be applied, so be clear about what you are applying,
    # or the result may not be what you expect.
    [root@k8s-80 ~]# kubectl apply -f flannel.yml
    [root@k8s-master ~]# cat kube-flannel.yaml |grep Network
          "Network": "10.244.0.0/16",
          hostNetwork: true
    [root@k8s-master ~]# cat kube-flannel.yaml |grep -w image |grep -v "#"
            image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.1
            image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.1

If the images cannot be pulled, pull them from images built in your own Aliyun registry, as was done for the control-plane images earlier.

all

    # Pull
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/mirrored-flannelcni-flannel:v0.17.0
    docker pull registry.cn-shenzhen.aliyuncs.com/uplooking/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
    # Tag
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/mirrored-flannelcni-flannel:v0.17.0 rancher/mirrored-flannelcni-flannel:v0.17.0
    docker tag registry.cn-shenzhen.aliyuncs.com/uplooking/mirrored-flannelcni-flannel-cni-plugin:v1.0.1 rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1

master

    [root@k8s-80 ~]# kubectl get no   # all nodes Ready means the basic cluster is up and healthy
    NAME     STATUS   ROLES                  AGE   VERSION
    k8s-80   Ready    control-plane,master   47m   v1.22.3
    k8s-81   Ready    <none>                 41m   v1.22.3
    k8s-82   Ready    <none>                 40m   v1.22.3

1.14 Enable ipvs in kube-proxy

kube-proxy uses iptables mode by default; this section switches it to ipvs.

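What if a resource needs to change after it has been created? That is what the kubectl edit command is for.

It can edit any resource in the cluster and opens the default text editor.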
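When an interactive editor is inconvenient (in a script, for instance), the same one-line change can be made with a text filter. This is only a sketch of that idea: `set_proxy_mode_ipvs` is a hypothetical helper, and it assumes the kube-proxy configuration contains a single line whose key is exactly `mode`.

```shell
# Read a kube-proxy configuration (or the whole ConfigMap as YAML) on stdin and
# rewrite the line whose key is exactly "mode" so that it selects ipvs.
# Keys that merely end in "Mode" (such as detectLocalMode) are left alone.
set_proxy_mode_ipvs() {
  sed 's/^\( *\)mode:.*$/\1mode: "ipvs"/'
}
```

One possible use is `kubectl get configmap kube-proxy -n kube-system -o yaml | set_proxy_mode_ipvs | kubectl apply -f -`; review the filtered output before applying it.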

master

    # Edit the kube-proxy configuration
    [root@k8s-80 ~]# kubectl edit configmap kube-proxy -n kube-system
    # Find the following part
          minSyncPeriod: 0s
          scheduler: ""
          syncPeriod: 30s
        kind: KubeProxyConfiguration
        metricsBindAddress: 127.0.0.1:10249
        mode: "ipvs"   # add this
        nodePortAddresses: null
    # mode is empty by default, which means iptables; change it to ipvs
    # scheduler is empty by default, which means round robin (rr)
    # Save and exit

    # Delete all kube-proxy pods
    kubectl delete pod xxx -n kube-system
    # kubectl delete po `kubectl get po -n kube-system | grep proxy | awk '{print $1}'` -n kube-system

    # Check the kube-proxy pod log
    kubectl logs kube-proxy-xxx -n kube-system
    # A line containing "Using ipvs Proxier" confirms the switch; ipvsadm -l works too

    # Delete the kube-proxy pods so that they are recreated
    # (this deletes only the kube-proxy pods in the given namespace;
    #  kubectl delete ns xxxx would delete the whole namespace)
    [root@k8s-80 ~]# kubectl get po -n kube-system
    NAME                             READY   STATUS    RESTARTS   AGE
    coredns-78fcd69978-d8cv5         1/1     Running   0          6m43s
    coredns-78fcd69978-qp7f6         1/1     Running   0          6m43s
    etcd-k8s-80                      1/1     Running   0          6m57s
    kube-apiserver-k8s-80            1/1     Running   0          6m59s
    kube-controller-manager-k8s-80   1/1     Running   0          6m58s
    kube-flannel-ds-88kmk            1/1     Running   0          2m58s
    kube-flannel-ds-wfvst            1/1     Running   0          2m58s
    kube-flannel-ds-wq2vz            1/1     Running   0          2m58s
    kube-proxy-4fpm9                 1/1     Running   0          6m28s
    kube-proxy-hhb5s                 1/1     Running   0          6m25s
    kube-proxy-jr5kl                 1/1     Running   0          6m43s
    kube-scheduler-k8s-80            1/1     Running   0          6m57s
    [root@k8s-80 ~]# kubectl delete pod kube-proxy-4fpm9 -n kube-system
    pod "kube-proxy-4fpm9" deleted
    [root@k8s-80 ~]# kubectl delete pod kube-proxy-hhb5s -n kube-system
    pod "kube-proxy-hhb5s" deleted
    [root@k8s-80 ~]# kubectl delete pod kube-proxy-jr5kl -n kube-system
    pod "kube-proxy-jr5kl" deleted
    # Check the cluster state
    [root@k8s-80 ~]# kubectl get po -n kube-system   # the kube-proxy pods have been recreated
    # Check ipvs
    [root@k8s-80 ~]# ipvsadm -l

kubectl get

The get command lists the resources available in the cluster, including:

- Namespace

- Pod

- Node

- Deployment

- Service

- ReplicaSet

Every get command accepts --namespace or -n to select a namespace. This is especially useful for looking at the pods in kube-system, which are the services Kubernetes itself needs to run.
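The tabular output of get is easy to post-process. As a small illustration, the filter below lists the pods that are not yet running; it assumes the default column layout of `kubectl get po` (NAME, READY, STATUS, RESTARTS, AGE), and `pods_not_running` is a hypothetical name.

```shell
# Read `kubectl get po` output on stdin and print the names of pods whose
# STATUS column is neither Running nor Completed. The header line is skipped.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}
```

For example, `kubectl get po -n kube-system | pods_not_running` would list the two coredns pods while the network plugin is still missing.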

    [root@k8s-80 ~]# kubectl get ns   # list the namespaces
    NAME              STATUS   AGE
    default           Active   23h   # the default namespace, used when -n is omitted
    kube-node-lease   Active   23h   # node heartbeat leases, used for node health monitoring
    kube-public       Active   23h   # public namespace
    kube-system       Active   23h   # system namespace
    # Create a new namespace
    [root@k8s-80 ~]# kubectl create ns dev
    namespace/dev created
    [root@k8s-80 ~]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   23h
    dev               Active   1s
    kube-node-lease   Active   23h
    kube-public       Active   23h
    kube-system       Active   23h
    # Do not delete namespaces casually: every resource inside is deleted too,
    # although it can be recovered through etcd
    # Show the services in a given namespace
    # Without a namespace, the default namespace is used
    [root@k8s-80 ~]# kubectl get svc -n kube-system
    NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
    kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   23h
    # Use kubectl get endpoints to verify that the DNS endpoints are exposed; they resolve names inside the cluster
    # These IPs are virtual; ip a or ifconfig on the host does not show them
    [root@k8s-80 ~]# kubectl get ep -n kube-system
    NAME       ENDPOINTS                                               AGE
    kube-dns   10.244.2.8:53,10.244.2.9:53,10.244.2.8:53 + 3 more...   23h
    # List the pods in a given namespace
    [root@k8s-80 ~]# kubectl get po -n kube-system
    NAME                             READY   STATUS    RESTARTS        AGE
    coredns-78fcd69978-f9pcw         1/1     Running   3 (12h ago)     24h
    coredns-78fcd69978-hprbh         1/1     Running   3 (12h ago)     24h
    etcd-k8s-80                      1/1     Running   4 (12h ago)     24h
    kube-apiserver-k8s-80            1/1     Running   4 (12h ago)     24h
    kube-controller-manager-k8s-80   1/1     Running   6 (98m ago)     24h
    kube-flannel-ds-28w79            1/1     Running   4 (12h ago)     24h
    kube-flannel-ds-bsw2t            1/1     Running   2 (12h ago)     24h
    kube-flannel-ds-rj57q            1/1     Running   3 (12h ago)     24h
    kube-proxy-d8hs2                 1/1     Running   0               11h
    kube-proxy-gms7v                 1/1     Running   0               11h
    kube-proxy-phbnk                 1/1     Running   0               11h
    kube-scheduler-k8s-80            1/1     Running   6 (98m ago)     24h

Creating pods

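Kubernetes ships with many built-in controllers. Each one behaves like a state machine that drives pods toward a desired state and behaviour.

A Deployment provides a declarative way to define Pods and ReplicaSets, replacing the older ReplicationController and making applications easier to manage. Typical use cases include:

- Defining a Deployment to create Pods and a ReplicaSet

- Rolling upgrades and rollbacks

- Scaling out and in

- Pausing and resuming a Deployment

1.1 Creating pods from a YAML file

We have already used YAML files above; as a reminder, pay close attention to indentation.

1.1.1 The pod manifest in detail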

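    apiVersion: apps/v1      # required, API version
    kind: Deployment         # required, resource type
    metadata:                # required, metadata
      name: nginx            # required, name of the resource
      namespace: nginx       # optional, namespace to create it in; defaults to default
      labels:                # optional but usually set; labels tie related resources together
        app: nginx
      annotations:           # optional, annotations
        app: nginx
    spec:                    # required, detailed specification
      replicas: 3            # number of replicas, 3 pods here (defaults to 1 if omitted)
      selector:              # replica selector
        matchLabels:         # labels to match, the same labels as in the template below
          app: nginx
      template:              # pod template
        metadata:
          labels:
            app: nginx
        spec:                # required, container details
          containers:        # required, list of containers
          - name: nginx      # container name
            image: nginx:1.20.2    # image and version used by the container
            ports:
            - containerPort: 80    # expose port 80 of the container

Write a simple pod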

    [root@k8s-80 ~]# mkdir /k8syaml
    [root@k8s-80 ~]# cd /k8syaml
    [root@k8s-80 k8syaml]# vim nginx.yaml   # taken from the official documentation
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.20.2
            ports:
            - containerPort: 80
    # Apply the manifest: this creates the resources, or updates them if they already exist
    # (kubectl create also creates them, but fails if they already exist)
    [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
    [root@k8s-80 k8syaml]# kubectl get po   # the pods are created in the default namespace
    NAME                                READY   STATUS              RESTARTS   AGE
    nginx-deployment-66b6c48dd5-hpxc6   0/1     ContainerCreating   0          18s
    nginx-deployment-66b6c48dd5-nslqj   0/1     ContainerCreating   0          18s
    nginx-deployment-66b6c48dd5-vwxlp   0/1     ContainerCreating   0          18s
    [root@k8s-80 k8syaml]# kubectl describe po nginx-deployment-66b6c48dd5-hpxc6   # print the details of one pod
    # How to get in
    # Enter the container started by the pod
    # kubectl exec -it podName -n nsName -- /bin/sh
    # kubectl exec -it podName -n nsName -- /bin/bash
    [root@k8s-80 k8syaml]# kubectl exec -it nginx-deployment-66b6c48dd5-hpxc6 -- bash
    # List the available API versions
    [root@k8s-80 k8syaml]# kubectl api-versions   # the apiVersion used in nginx.yaml can be found here
    [root@k8s-80 k8syaml]# kubectl get po   # check the pod status in the namespace
    NAME                    READY   STATUS    RESTARTS   AGE
    nginx-cc4b758d6-rrtcc   1/1     Running   0          2m14s
    nginx-cc4b758d6-vmrw5   1/1     Running   0          2m14s
    nginx-cc4b758d6-w84qb   1/1     Running   0          2m14s
    # Show the details of one pod
    [root@k8s-80 k8syaml]# kubectl describe po nginx-deployment-66b6c48dd5-b82ck
    # Show the information of all nodes
    [root@k8s-80 k8syaml]# kubectl describe node   # keep each node to at most 40 pods, ideally 20 to 30 (Alibaba's recommendation)

Defining a Service

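A Service in Kubernetes is a REST object, similar to a Pod. Like all REST objects, a Service definition can be POSTed to the API server to create a new instance. The name of a Service object must be a valid RFC 1035 label name.

From the Service theory covered earlier, we know that pods sometimes need to be reachable from outside, and that is where a Service helps. A Service is an abstract resource that acts as a proxy or load-balancing layer in front of pods: traffic sent to the Service reaches the pods. The commonly used types are ClusterIP and NodePort.

Kubernetes ServiceTypes let you choose the kind of Service you need; the default is ClusterIP.

The values of type and their behaviour are:

- ClusterIP: exposes the service on a cluster-internal IP. With this value the service is reachable only from inside the cluster. This is the default ServiceType.
- NodePort: exposes the service on each node's IP at a static port (the NodePort). A NodePort service routes to an automatically created ClusterIP service. The service can be reached from outside the cluster by requesting <node IP>:<node port>.
- LoadBalancer: exposes the service externally through the cloud provider's load balancer. The external load balancer routes traffic to the automatically created NodePort and ClusterIP services.
- ExternalName: maps the service to the contents of the externalName field (for example foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up.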

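For the NodePort type, the Kubernetes control plane allocates a port from the range given by the --service-node-port-range flag (default: 30000-32767). It is not really recommended for production: it works when there are few services, but its limits show once there are many, so it is best suited to testing.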
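The default range is small enough to reason about directly. The sketch below checks whether a chosen port falls inside it and computes how many ports it holds; both function names are hypothetical, and the bounds are the default values quoted above, so adjust them if your cluster sets a different --service-node-port-range.

```shell
# Succeed when PORT lies in the default NodePort range 30000-32767.
nodeport_in_default_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Print the number of ports in the default range (both ends included).
nodeport_range_size() {
  echo $((32767 - 30000 + 1))
}
```

For example, `nodeport_in_default_range 30005 && echo ok` prints ok, while port 80 is rejected.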

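2.1 The Service YAML manifest in detail

    apiVersion: v1           # required, API version
    kind: Service            # required, the type is Service
    metadata:                # required, metadata
      name: nginx            # required, name of the service, usually the same as the application
      namespace: nginx       # optional, namespace of the service; defaults to default
    spec:                    # required, detailed specification
      type:                  # optional, service type; defaults to ClusterIP
      selector:              # pod selector
        app: nginx
      ports:                 # required, port details
      - port: 80             # port exposed by the service
        targetPort: 80       # pod port the service forwards to
        nodePort: 30005      # optional, range 30000-32767

2.2 ClusterIP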

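ClusterIP is the cluster-internal mode and the default Service type in Kubernetes: it can be reached only from inside the cluster, not from outside. Its main purpose is to give pods inside the cluster a stable address for reaching the service. The address is assigned automatically by default; a fixed IP can be set with the clusterIP field.

2.2.1 The YAML file for ClusterIP

All resources are usually written in a single YAML file, so we continue in nginx.yml. Remember to separate the two resources with a line containing only ---.

    cd /opt/k8s
    vim nginx.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.20.1
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
    spec:
      selector:
        app: nginx
      ports:
      - name: http
        protocol: TCP
        port: 80
        targetPort: 80

2.2.2 Apply and check the IP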

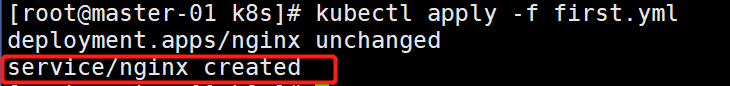

Note: apply also updates existing resources, so there is no need to delete and recreate them.

kubectl apply -f nginx.yml

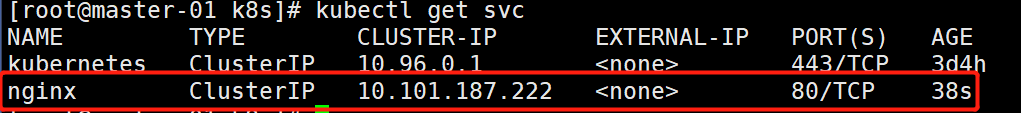

kubectl get svc

我们可以看到已经多了一个IP

2.2.3 验证

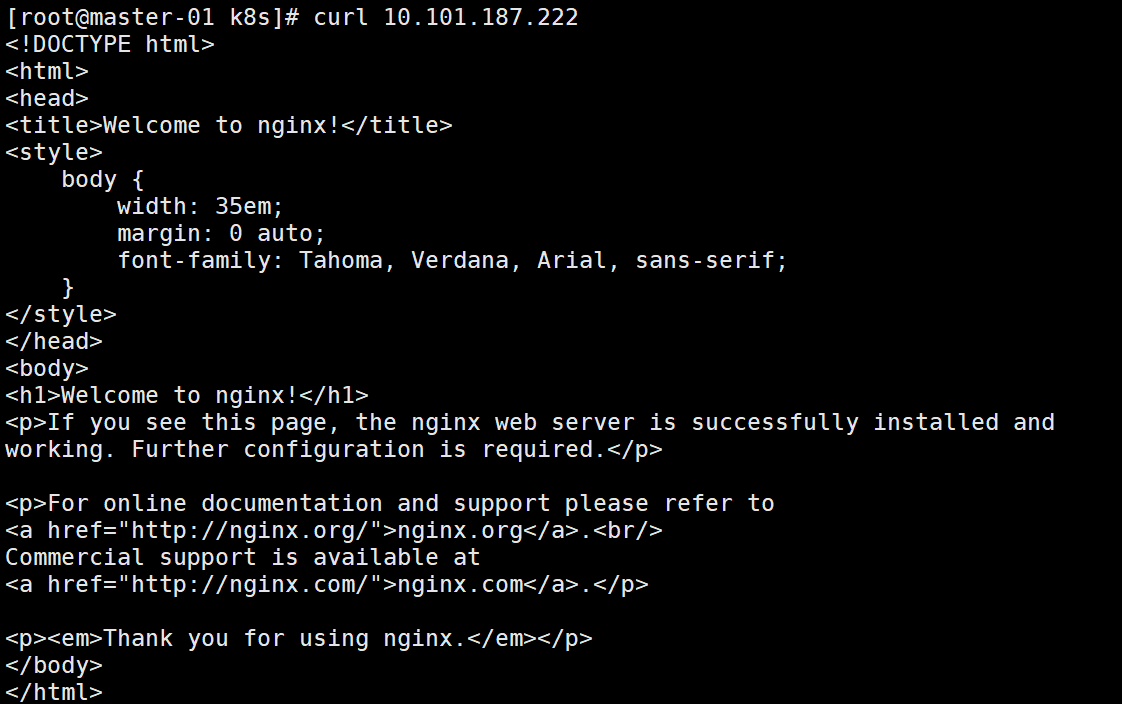

(1)这时我们集群内部访问这个ip,发现可以访问。

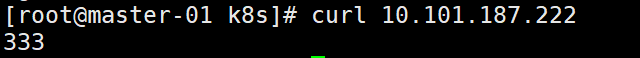

curl 10.101.187.222

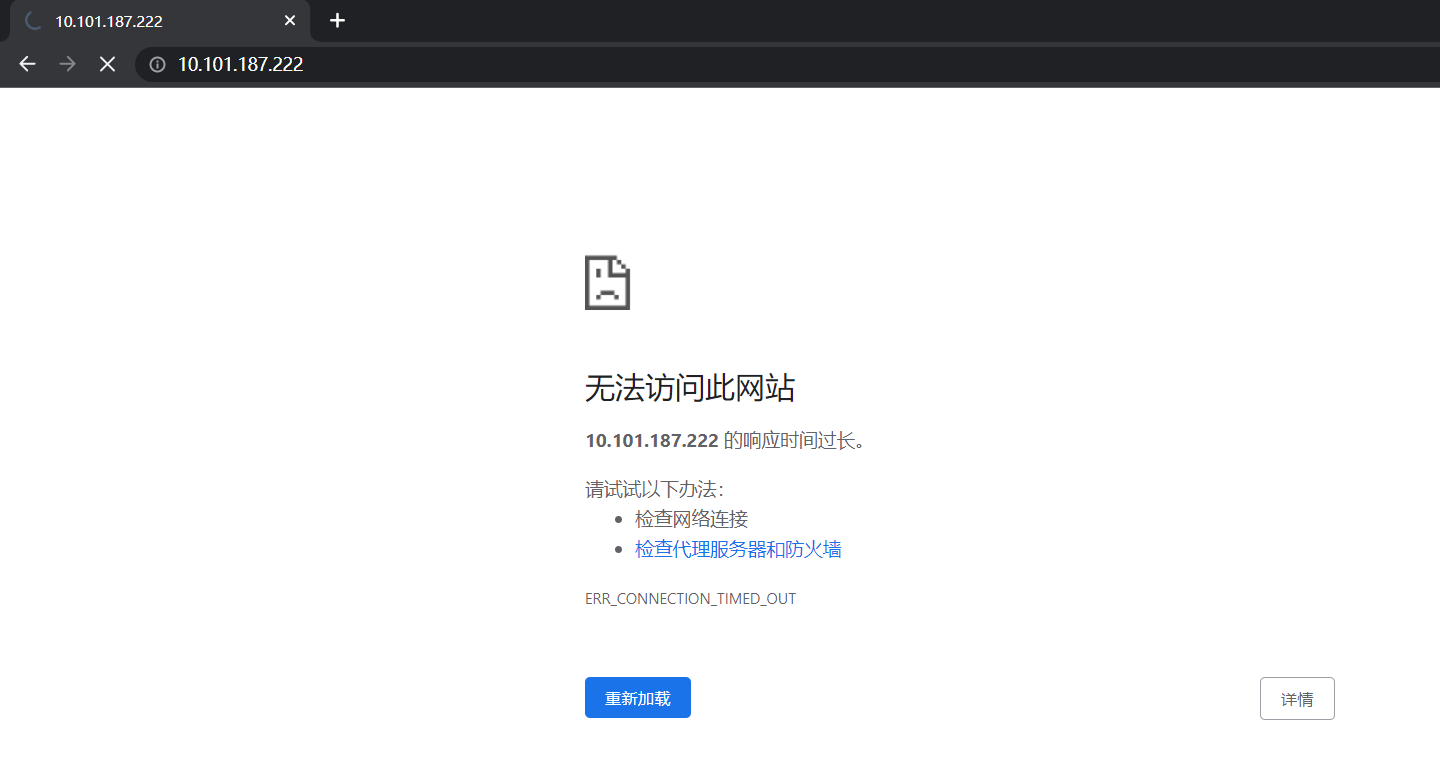

(2)这时,我们在外部访问一下,发现无法访问。

(3)综上所述,符合ClusterIP的特性,内部可访问,外部不可访问。

2.3 NodePort 类型

通过每个节点上的 IP 和静态端口(NodePort)暴露服务。这里需要注意的是,当我们在节点开了这个端口后,那么在么一个节点上都可以访问到这个IP端口,那么就可以访问到服务。这种类型的缺点是端口只有2768个,当服务比端口多的时候,这种类型就不行了。

2.3.1 Noteport的yml文件

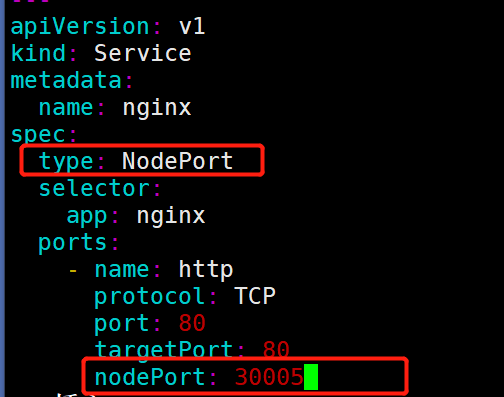

只是在ClusterIP类型的yml文件上多了type和nodePort,注意范围。

cd/opt/k8svimnginx.ymlapiVersion:apps/v1kind:Deploymentmetadata:name:nginxlabels:app:nginxspec:replicas:3selector:matchLabels:app:nginxtemplate:metadata:labels:app:nginxspec:containers:-name:nginximage:nginx:1.20.1ports:-containerPort:80---apiVersion:v1kind:Servicemetadata:name:nginxspec:type:NodePortselector:app:nginxports:-name:httpprotocol:TCPport:80targetPort:80nodePort:30005

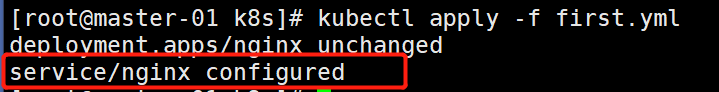

2.3.2 运行并查看 IP

kubectl apply -f nginx.yml

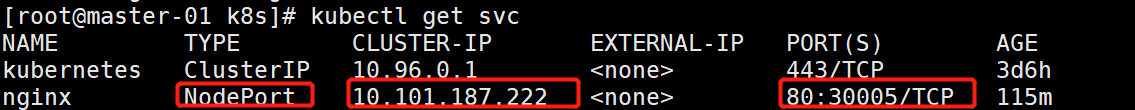

kubectl get svc

我们可以看到类型变成了NodePort,端口变成80:30005

2.3.3 验证

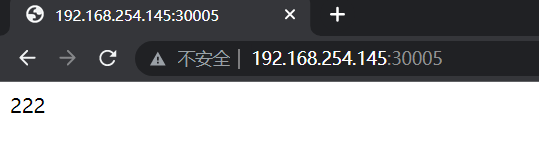

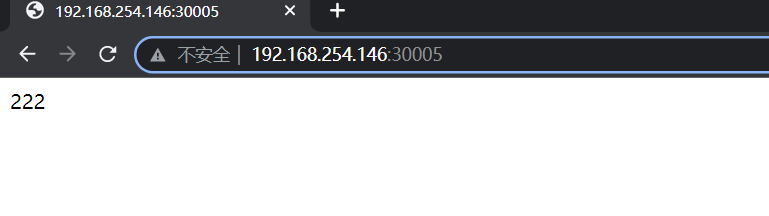

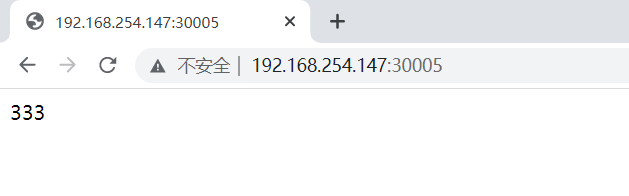

(1)这时我们集群内部访问这个ip,发现可以访问。

curl 10.101.187.222

(2)这时,重要的是外网是否能访问,我们在外部访问一下,注意外部访问是访问我们的宿主机IP:30005(是service的IP是虚拟的,是k8s给的这个IP,所以在内部是可以访问到,但是外部是不可访问到的),发现可以访问。

#浏览器输入三台宿主机的ip加30005此时能正常访问,但是因为是4层代理有会话保持,所以轮询效果比较难看到

(3)综上所述,符合NodePort的特性,内部可访问,外部也可访问。

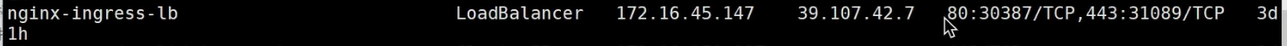

2.4 LoadBalancer

This type exposes the service externally through the cloud provider's load balancer; in this setup the ingress is bound directly to the SLB (the cloud load balancer).

2.4.1 The YAML file for LoadBalancer

    cd /opt/k8s
    vim nginx.yml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.20.1
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
    spec:
      type: LoadBalancer
      selector:
        app: nginx
      ports:
      - port: 80
        targetPort: 80

2.4.2 Check the IP

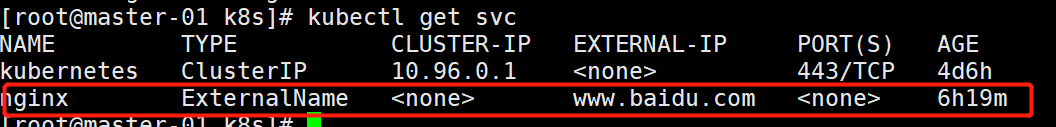

2.5 ExternalName

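An ExternalName Service is a special case of Service: it has no selector and defines no ports or Endpoints. Its purpose is to return an alias for a service outside the cluster. The external address is wrapped once more inside the cluster (in practice the cluster DNS server answers with a CNAME that points at the external address), so workloads only need to use the in-cluster name.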
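The in-cluster name that gets the CNAME answer follows the usual Service naming pattern. As a small sketch, the helper below builds that fully qualified name; `service_fqdn` is a hypothetical function, and cluster.local is assumed as the cluster domain because it is the common default.

```shell
# service_fqdn NAME [NAMESPACE] [CLUSTER_DOMAIN]
# Print the in-cluster DNS name of a Service.
# NAMESPACE defaults to default and CLUSTER_DOMAIN to cluster.local (an assumption).
service_fqdn() {
  name="$1"
  namespace="${2:-default}"
  domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}
```

Inside a pod, `nslookup "$(service_fqdn nginx)"` queries the same record as the short name nginx does from the default namespace.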

例如你们公司的镜像仓库,最开始是用 ip 访问,等到后面域名下来了再使用域名访问。你不可能去修改每处的引用。但是可以创建一个 ExternalName,首先指向到 ip,等后面再指向到域名。

2.5.1 ExternalName的yml文件

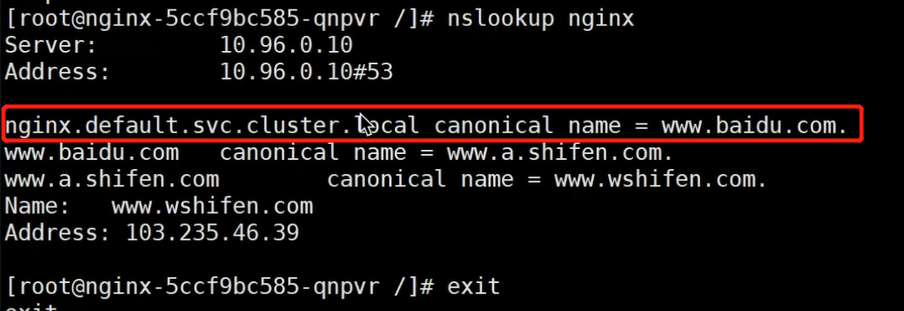

这里,我们验证一下,在容器内部,访问nginx,看下会不会跳转到百度上

apiVersion:apps/v1kind:Deploymentmetadata:name:nginxlabels:app:nginxspec:replicas:3selector:matchLabels:app:nginxtemplate:metadata:labels:app:nginxspec:containers:-name:nginximage:nginx:1.20.1ports:-containerPort:80---apiVersion:v1kind:Servicemetadata:name:nginxspec:type:ExternalNameexternalName:www.baidu.com2.5.2 运行并查看状态

kubectl apply -f second.yml

kubectl get svc

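2.5.3 Verification

Enter the container:

[root@master-01 k8s]# kubectl exec -it nginx-58b9b8ff79-hj4gv -- bash

Use nslookup to check where the name resolves:

nslookup nginx

This shows that with ExternalName, accessing the service name from inside the cluster is redirected (through a DNS CNAME) to the address we configured.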

2.6 Ingress NGINX

    [root@k8s-80 k8syaml]# kubectl delete -f nginx.yaml   # delete every resource defined in nginx.yaml
    deployment.apps "nginx-deployment" deleted
    service "nginx" deleted

The manifest that follows is the official YAML file. I pulled its images, hosted them on Aliyun and changed the file accordingly; adapt yours from https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.4.0/deploy/static/provider/baremetal/deploy.yaml

ingress-nginxapiVersion: v1kind: Namespacemetadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx name: ingress-nginx---apiVersion: v1automountServiceAccountToken: truekind: ServiceAccountmetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx namespace: ingress-nginx---apiVersion: v1kind: ServiceAccountmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission namespace: ingress-nginx---apiVersion: rbac.authorization.k8s.io/v1kind: Rolemetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx namespace: ingress-nginxrules:- apiGroups: - "" resources: - namespaces verbs: - get- apiGroups: - "" resources: - configmaps - pods - secrets - endpoints verbs: - get - list - watch- apiGroups: - "" resources: - services verbs: - get - list - watch- apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch- apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update- apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch- apiGroups: - "" resourceNames: - ingress-controller-leader resources: - configmaps verbs: - get - update- apiGroups: - "" resources: - configmaps verbs: - create- apiGroups: - coordination.k8s.io resourceNames: - ingress-controller-leader resources: - leases verbs: - get - update- apiGroups: - coordination.k8s.io resources: - leases verbs: - create- apiGroups: - "" resources: - events verbs: - create - patch- 
apiGroups: - discovery.k8s.io resources: - endpointslices verbs: - list - watch - get---apiVersion: rbac.authorization.k8s.io/v1kind: Rolemetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission namespace: ingress-nginxrules:- apiGroups: - "" resources: - secrets verbs: - get - create---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRolemetadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginxrules:- apiGroups: - "" resources: - configmaps - endpoints - nodes - pods - secrets - namespaces verbs: - list - watch- apiGroups: - coordination.k8s.io resources: - leases verbs: - list - watch- apiGroups: - "" resources: - nodes verbs: - get- apiGroups: - "" resources: - services verbs: - get - list - watch- apiGroups: - networking.k8s.io resources: - ingresses verbs: - get - list - watch- apiGroups: - "" resources: - events verbs: - create - patch- apiGroups: - networking.k8s.io resources: - ingresses/status verbs: - update- apiGroups: - networking.k8s.io resources: - ingressclasses verbs: - get - list - watch- apiGroups: - discovery.k8s.io resources: - endpointslices verbs: - list - watch - get---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRolemetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admissionrules:- apiGroups: - admissionregistration.k8s.io resources: - validatingwebhookconfigurations verbs: - get - update---apiVersion: rbac.authorization.k8s.io/v1kind: RoleBindingmetadata: labels: app.kubernetes.io/component: controller 
app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx namespace: ingress-nginxroleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginxsubjects:- kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx---apiVersion: rbac.authorization.k8s.io/v1kind: RoleBindingmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission namespace: ingress-nginxroleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: ingress-nginx-admissionsubjects:- kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: labels: app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginxroleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginxsubjects:- kind: ServiceAccount name: ingress-nginx namespace: ingress-nginx---apiVersion: rbac.authorization.k8s.io/v1kind: ClusterRoleBindingmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admissionroleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: ingress-nginx-admissionsubjects:- kind: ServiceAccount name: ingress-nginx-admission namespace: ingress-nginx---apiVersion: v1data: allow-snippet-annotations: "true"kind: ConfigMapmetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: 
ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-controller namespace: ingress-nginx---apiVersion: v1kind: Servicemetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-controller namespace: ingress-nginxspec: ipFamilies: - IPv4 ipFamilyPolicy: SingleStack ports: - appProtocol: http name: http port: 80 protocol: TCP targetPort: http - appProtocol: https name: https port: 443 protocol: TCP targetPort: https selector: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx type: NodePort---apiVersion: v1kind: Servicemetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-controller-admission namespace: ingress-nginxspec: ports: - appProtocol: https name: https-webhook port: 443 targetPort: webhook selector: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx type: ClusterIP---apiVersion: apps/v1kind: Deploymentmetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-controller namespace: ingress-nginxspec: minReadySeconds: 0 revisionHistoryLimit: 10 selector: matchLabels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx template: metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx spec: containers: - args: - /nginx-ingress-controller - 
--election-id=ingress-controller-leader - --controller-class=k8s.io/ingress-nginx - --ingress-class=nginx - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller - --validating-webhook=:8443 - --validating-webhook-certificate=/usr/local/certificates/cert - --validating-webhook-key=/usr/local/certificates/key env: - name: POD_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: LD_PRELOAD value: /usr/local/lib/libmimalloc.so image: registry.k8s.io/ingress-nginx/controller:v1.4.0@sha256:34ee929b111ffc7aa426ffd409af44da48e5a0eea1eb2207994d9e0c0882d143 imagePullPolicy: IfNotPresent lifecycle: preStop: exec: command: - /wait-shutdown livenessProbe: failureThreshold: 5 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 name: controller ports: - containerPort: 80 name: http protocol: TCP - containerPort: 443 name: https protocol: TCP - containerPort: 8443 name: webhook protocol: TCP readinessProbe: failureThreshold: 3 httpGet: path: /healthz port: 10254 scheme: HTTP initialDelaySeconds: 10 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 resources: requests: cpu: 100m memory: 90Mi securityContext: allowPrivilegeEscalation: true capabilities: add: - NET_BIND_SERVICE drop: - ALL runAsUser: 101 volumeMounts: - mountPath: /usr/local/certificates/ name: webhook-cert readOnly: true dnsPolicy: ClusterFirst nodeSelector: kubernetes.io/os: linux serviceAccountName: ingress-nginx terminationGracePeriodSeconds: 300 volumes: - name: webhook-cert secret: secretName: ingress-nginx-admission---apiVersion: batch/v1kind: Jobmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission-create namespace: ingress-nginxspec: template: metadata: 
labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission-create spec: containers: - args: - create - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc - --namespace=$(POD_NAMESPACE) - --secret-name=ingress-nginx-admission env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f imagePullPolicy: IfNotPresent name: create securityContext: allowPrivilegeEscalation: false nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure securityContext: fsGroup: 2000 runAsNonRoot: true runAsUser: 2000 serviceAccountName: ingress-nginx-admission---apiVersion: batch/v1kind: Jobmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission-patch namespace: ingress-nginxspec: template: metadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admission-patch spec: containers: - args: - patch - --webhook-name=ingress-nginx-admission - --namespace=$(POD_NAMESPACE) - --patch-mutating=false - --secret-name=ingress-nginx-admission - --patch-failure-policy=Fail env: - name: POD_NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace image: registry.k8s.io/ingress-nginx/kube-webhook-certgen:v20220916-gd32f8c343@sha256:39c5b2e3310dc4264d638ad28d9d1d96c4cbb2b2dcfb52368fe4e3c63f61e10f imagePullPolicy: IfNotPresent name: patch 
securityContext: allowPrivilegeEscalation: false nodeSelector: kubernetes.io/os: linux restartPolicy: OnFailure securityContext: fsGroup: 2000 runAsNonRoot: true runAsUser: 2000 serviceAccountName: ingress-nginx-admission---apiVersion: networking.k8s.io/v1kind: IngressClassmetadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: nginxspec: controller: k8s.io/ingress-nginx---apiVersion: admissionregistration.k8s.io/v1kind: ValidatingWebhookConfigurationmetadata: labels: app.kubernetes.io/component: admission-webhook app.kubernetes.io/instance: ingress-nginx app.kubernetes.io/name: ingress-nginx app.kubernetes.io/part-of: ingress-nginx app.kubernetes.io/version: 1.4.0 name: ingress-nginx-admissionwebhooks:- admissionReviewVersions: - v1 clientConfig: service: name: ingress-nginx-controller-admission namespace: ingress-nginx path: /networking/v1/ingresses failurePolicy: Fail matchPolicy: Equivalent name: validate.nginx.ingress.kubernetes.io rules: - apiGroups: - networking.k8s.io apiVersions: - v1 operations: - CREATE - UPDATE resources: - ingresses sideEffects: Nonemaster

[root@k8s-80 k8syaml]# kubectl apply -f ingress-nginx.yml
[root@k8s-80 k8syaml]# kubectl get po -n ingress-nginx
NAME                                        READY   STATUS              RESTARTS   AGE
ingress-nginx-admission-create-wjb9d        0/1     Completed           0          12s
ingress-nginx-admission-patch-s9pc8         0/1     Completed           0          12s
ingress-nginx-controller-6b548d5677-t42qc   0/1     ContainerCreating   0          12s

Note: if the images cannot be pulled, use the Alibaba Cloud registry:
docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/webhook-certgen:v20220916
docker pull registry.cn-guangzhou.aliyuncs.com/testbydocker/controller_ingress:v1.4.0
It is better to change the image fields inside the YAML file accordingly.

[root@k8s-80 k8syaml]# kubectl describe po ingress-nginx-admission-create-wjb9d -n ingress-nginx
[root@k8s-80 k8syaml]# kubectl get po -n ingress-nginx    # the admission pods only handle the webhook secret and can be ignored
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-wjb9d        0/1     Completed   0          48s
ingress-nginx-admission-patch-s9pc8         0/1     Completed   0          48s
ingress-nginx-controller-6b548d5677-t42qc   1/1     Running     0          48s
[root@k8s-80 k8syaml]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.101.104.205   <none>        80:30799/TCP,443:31656/TCP   66s
ingress-nginx-controller-admission   ClusterIP   10.107.116.128   <none>        443/TCP                      66s

Test

[root@k8s-80 k8syaml]# vim nginx.yaml    # switch the image; this uses one pulled from Alibaba Cloud: registry.cn-shenzhen.aliyuncs.com/adif0028/nginx_php:74v3
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS   AGE
nginx-b65884ff7-24f4j   1/1     Running   0          49s
nginx-b65884ff7-8qss6   1/1     Running   0          49s
nginx-b65884ff7-vhnbt   1/1     Running   0          49s
[root@k8s-80 k8syaml]# kubectl exec -it nginx-b65884ff7-24f4j -- bash
[root@nginx-b65884ff7-24f4j /]# yum provides nslookup
[root@nginx-b65884ff7-24f4j /]# yum -y install bind-utils
[root@nginx-b65884ff7-24f4j /]# nslookup kubernetes
Server:     10.96.0.10
Address:    10.96.0.10#53

Name:       kubernetes.default.svc.cluster.local
Address:    10.96.0.1
[root@nginx-b65884ff7-24f4j /]# nslookup nginx
Server:     10.96.0.10
Address:    10.96.0.10#53

Name:       nginx.default.svc.cluster.local
Address:    10.111.201.41
[root@nginx-b65884ff7-24f4j /]# curl nginx.default.svc.cluster.local    # returns the HTML page as expected
[root@nginx-b65884ff7-24f4j /]# curl nginx    # also works; the short name is simply the Service name

Enable cluster mode

# Comment out type and nodePort in the Service
[root@k8s-80 k8syaml]# vim nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-shenzhen.aliyuncs.com/adif0028/nginx_php:74v3
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  #type: NodePort
  selector:
    app: nginx
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80
      #nodePort: 30005
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl exec -it nginx-b65884ff7-24f4j -- bash
[root@nginx-b65884ff7-24f4j /]# curl nginx    # resolved from inside the cluster
# Open another terminal
[root@k8s-80 /]# kubectl get svc    # the Service name (nginx) resolves to the CLUSTER-IP shown here; a headless Service has no cluster IP and resolves to the Pod IPs instead
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   30m
nginx        ClusterIP   10.111.201.41   <none>        80/TCP    4m59s
[root@k8s-80 /]# kubectl get svc -n ingress-nginx    # check the ports exposed by the Service
NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.101.104.205   <none>        80:30799/TCP,443:31656/TCP   7m13s
ingress-nginx-controller-admission   ClusterIP   10.107.116.128   <none>        443/TCP                      7m13s
[root@nginx-b65884ff7-24f4j /]# exit

Enable Ingress

[root@k8s-80 /]# kubectl explain ing    # check VERSION
[root@k8s-80 k8syaml]# vi nginx.yaml    # adds the kind: Ingress document
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-shenzhen.aliyuncs.com/adif0028/nginx_php:74v3
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  #type: NodePort
  selector:
    app: nginx
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80
      #nodePort: 30005
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: www.lin.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS   AGE
nginx-b65884ff7-24f4j   1/1     Running   0          6m38s
nginx-b65884ff7-8qss6   1/1     Running   0          6m38s
nginx-b65884ff7-vhnbt   1/1     Running   0          6m38s
# Turn the index page into a PHP page
[root@k8s-80 k8syaml]# kubectl exec -it nginx-b65884ff7-24f4j -- bash
[root@nginx-b65884ff7-24f4j /]# mv /usr/local/nginx/html/index.html /usr/local/nginx/html/index.php
[root@nginx-b65884ff7-24f4j /]# >/usr/local/nginx/html/index.php
[root@nginx-b65884ff7-24f4j /]# vi /usr/local/nginx/html/index.php
<?php phpinfo(); ?>
[root@nginx-b65884ff7-24f4j /]# /etc/init.d/php-fpm restart
Gracefully shutting down php-fpm . done
Starting php-fpm  done
[root@nginx-b65884ff7-24f4j /]# exit
exit
# Repeat the same steps on the other two replicas
# Edit the hosts file on Windows so the name resolves: 192.168.188.80 www.lin.com
# The browser must use the domain name (not the IP), with the port number appended ==> www.lin.com:30799; the PHP info page appears
# If you do not know the port number, run kubectl get svc -n ingress-nginx
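Looking up the NodePort by eye works, but it can also be scripted. The sketch below is only an illustration: `node_port_for` is a hypothetical helper name, and the sample string is the PORT(S) value copied from the `kubectl get svc -n ingress-nginx` output shown earlier rather than read from a live cluster.

```shell
# Print the NodePort mapped to a given service port.
# $1: a PORT(S) value as printed by `kubectl get svc`, e.g. "80:30799/TCP,443:31656/TCP"
# $2: the service port to look up, e.g. 80
node_port_for() {
  # Split the entries on commas, then split each entry on ':' and '/'.
  # Only entries of the form port:nodePort/protocol (3 fields) carry a NodePort.
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F'[:/]' -v p="$2" 'NF == 3 && $1 == p { print $2 }'
}

# Sample value taken from this walkthrough; it will differ on another cluster.
ports='80:30799/TCP,443:31656/TCP'
echo "http://www.lin.com:$(node_port_for "$ports" 80)"
```

On a live cluster the same value can probably be read directly with `kubectl get svc ingress-nginx-controller -n ingress-nginx -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'`, which avoids parsing the table at all.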

Reverse proxy

- Install nginx

Install it on the master, because the master is under relatively little load at this point.

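# A script is used here
# Note: when the Kubernetes repo was configured to use the Aliyun mirror, it was pointed out that index GPG checks may fail because the upstream site does not offer an official sync method
# So run these two commands first
[root@k8s-80 ~]# sed -i "s#repo_gpgcheck=1#repo_gpgcheck=0#g" /etc/yum.repos.d/kubernetes.repo
[root@k8s-80 ~]# sed -i "s#gpgcheck=1#gpgcheck=0#g" /etc/yum.repos.d/kubernetes.repo
[root@k8s-80 ~]# sh nginx.sh

- Modify the configuration file to do layer-4 forwarding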

[root@k8s-80 ~]# cp /usr/local/nginx/conf/nginx.conf /usr/local/nginx/conf/nginx.conf.bak
[root@k8s-80 /]# grep -Ev "#|^$" /usr/local/nginx/conf/nginx.conf
worker_processes  1;
events {
    worker_connections  1024;
}
stream {
    upstream tcp_proxy {
        server 192.168.188.80:30799;
    }
    server {
        listen 80;
        proxy_pass tcp_proxy;
    }
}
http {
    include       mime.types;
    default_type  application/octet-stream;
    sendfile        on;
    keepalive_timeout  65;
}
[root@k8s-80 config]# nginx -t
nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok
nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful
[root@k8s-80 config]# nginx -s reload
# Start nginx on boot
[root@k8s-80 config]# vim /etc/rc.local    # append one line containing the command: nginx
[root@k8s-80 config]# chmod +x /etc/rc.d/rc.local
# Verify in the browser by visiting www.lin.com (no port number needed now)

Labels

Method 1: labelling a pod

# List pods (default namespace) with extra details such as the pod IP and the node it runs on
[root@k8s-80 ~]# kubectl get po -o wide
NAME                    READY   STATUS    RESTARTS       AGE     IP            NODE     NOMINATED NODE   READINESS GATES
nginx-b65884ff7-24f4j   1/1     Running   2 (142m ago)   6h10m   10.244.1.12   k8s-81   <none>           <none>
nginx-b65884ff7-8qss6   1/1     Running   2 (142m ago)   6h10m   10.244.2.15   k8s-82   <none>           <none>
nginx-b65884ff7-vhnbt   1/1     Running   2 (142m ago)   6h10m   10.244.1.13   k8s-81   <none>           <none>
[root@k8s-80 ~]# kubectl get po
NAME                    READY   STATUS    RESTARTS       AGE
nginx-b65884ff7-24f4j   1/1     Running   2 (142m ago)   6h10m
nginx-b65884ff7-8qss6   1/1     Running   2 (142m ago)   6h10m
nginx-b65884ff7-vhnbt   1/1     Running   2 (142m ago)   6h10m
# Show the labels of all pods
[root@k8s-80 ~]# kubectl get po --show-labels
NAME                    READY   STATUS    RESTARTS       AGE     LABELS
nginx-b65884ff7-24f4j   1/1     Running   2 (155m ago)   6h23m   app=nginx,pod-template-hash=b65884ff7
nginx-b65884ff7-8qss6   1/1     Running   2 (155m ago)   6h23m   app=nginx,pod-template-hash=b65884ff7
nginx-b65884ff7-vhnbt   1/1     Running   2 (155m ago)   6h23m   app=nginx,pod-template-hash=b65884ff7
# Add a label
Option 1: use kubectl edit pod nginx-b65884ff7-24f4j
Option 2:
[root@k8s-80 ~]# kubectl label po nginx-b65884ff7-24f4j uplookingdev=shy
pod/nginx-b65884ff7-24f4j labeled
[root@k8s-80 ~]# kubectl get po --show-labels
NAME                    READY   STATUS    RESTARTS       AGE     LABELS
nginx-b65884ff7-24f4j   1/1     Running   2 (157m ago)   6h25m   app=nginx,pod-template-hash=b65884ff7,uplookingdev=shy
nginx-b65884ff7-8qss6   1/1     Running   2 (157m ago)   6h25m   app=nginx,pod-template-hash=b65884ff7
nginx-b65884ff7-vhnbt   1/1     Running   2 (157m ago)   6h25m   app=nginx,pod-template-hash=b65884ff7

Removing a label

[root@k8s-80 ~]# kubectl label po nginx-b65884ff7-24f4j uplookingdev-    # a trailing "-" after the key removes the label
pod/nginx-b65884ff7-24f4j labeled
[root@k8s-80 ~]# kubectl get po --show-labels
NAME                    READY   STATUS    RESTARTS       AGE     LABELS
nginx-b65884ff7-24f4j   1/1     Running   2 (158m ago)   6h26m   app=nginx,pod-template-hash=b65884ff7
nginx-b65884ff7-8qss6   1/1     Running   2 (158m ago)   6h26m   app=nginx,pod-template-hash=b65884ff7
nginx-b65884ff7-vhnbt   1/1     Running   2 (158m ago)   6h26m   app=nginx,pod-template-hash=b65884ff7

Method 2: labelling a Service

[root@k8s-master ~]# kubectl get svc --show-labels
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   LABELS
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   22h   component=apiserver,provider=kubernetes
[root@k8s-master ~]# kubectl label svc kubernetes today=happy
service/kubernetes labeled
[root@k8s-master ~]# kubectl get svc --show-labels
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   LABELS
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   22h   component=apiserver,provider=kubernetes,today=happy

Method 3: labelling a namespace

[root@k8s-master ~]# kubectl label ns default today=happy
namespace/default labeled
[root@k8s-master ~]# kubectl get ns --show-labels
NAME              STATUS   AGE   LABELS
default           Active   22h   kubernetes.io/metadata.name=default,today=happy
ingress-nginx     Active   14m   app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,kubernetes.io/metadata.name=ingress-nginx
kube-flannel      Active   19h   kubernetes.io/metadata.name=kube-flannel,pod-security.kubernetes.io/enforce=privileged
kube-node-lease   Active   22h   kubernetes.io/metadata.name=kube-node-lease
kube-public       Active   22h   kubernetes.io/metadata.name=kube-public
kube-system       Active   22h   kubernetes.io/metadata.name=kube-system

Method 4: labelling a node

[root@k8s-80 ~]# kubectl get no
NAME     STATUS   ROLES                  AGE     VERSION
k8s-80   Ready    control-plane,master   6h52m   v1.22.3
k8s-81   Ready    <none>                 6h52m   v1.22.3
k8s-82   Ready    <none>                 6h52m   v1.22.3
[root@k8s-80 ~]# kubectl label no k8s-81 node-role.kubernetes.io/php=true
node/k8s-81 labeled
[root@k8s-80 ~]# kubectl label no k8s-81 node-role.kubernetes.io/bus=true
node/k8s-81 labeled
[root@k8s-80 ~]# kubectl label no k8s-82 node-role.kubernetes.io/go=true
node/k8s-82 labeled
[root@k8s-80 ~]# kubectl label no k8s-82 node-role.kubernetes.io/bus=true
node/k8s-82 labeled
[root@k8s-80 ~]# kubectl get no
NAME     STATUS   ROLES                  AGE     VERSION
k8s-80   Ready    control-plane,master   6h56m   v1.22.3
k8s-81   Ready    bus,php                6h55m   v1.22.3
k8s-82   Ready    bus,go                 6h55m   v1.22.3

Label a node so that certain pods run only on specific nodes:
kubectl label no $node node-role.kubernetes.io/$mark=true    # $node is the node name, $mark is the label you want to add
To schedule particular pods onto nodes carrying that label, add a label selector in the YAML file:
spec:    # the second spec, i.e. the pod template's spec
  nodeSelector:
    node-role.kubernetes.io/$mark: "true"

kubectl rollout history

# List the revisions rolled out so far (history)
[root@k8s-80 k8syaml]# kubectl rollout history deployment
deployment.apps/nginx
REVISION  CHANGE-CAUSE
1         <none>
[root@k8s-80 k8syaml]# kubectl rollout history deployment --revision=1    # show the details of deployment revision 1
deployment.apps/nginx with revision #1
Pod Template:
  Labels:       app=nginx
                pod-template-hash=b65884ff7
  Containers:
   nginx:
    Image:        registry.cn-shenzhen.aliyuncs.com/adif0028/nginx_php:74v3
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

Rollback

# Roll back to the previous rollout
# undo goes back one revision by default
[root@k8s-80 k8syaml]# kubectl rollout undo deployment nginx
# Roll back to a specific revision
[root@k8s-80 k8syaml]# kubectl rollout undo deployment nginx --to-revision=<revision number>

Update

Hot (rolling) updates are supported.

[root@k8s-80 k8syaml]# kubectl set image deployment nginx nginx=nginx:1.20.1    # format is <container name>=<image>; the image must exist in the registry
deployment.apps/nginx image updated
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS              RESTARTS       AGE
nginx-b65884ff7-8qss6   1/1     Running             2 (3h5m ago)   6h53m
nginx-bc779cc7c-jxh87   0/1     ContainerCreating   0              9s
[root@k8s-80 k8syaml]# kubectl edit deployment nginx    # inside, the image version now reads 1.20.1
# Change the replica count to 2
# Make sure the image reads nginx:1.20.1

Scaling out

As the number of users grows and the current backend can no longer meet demand, the traditional answer is to add servers horizontally. K8S supports horizontal scaling as well.

[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-58b9b8ff79-2nc2q   1/1     Running   0          4m59s
nginx-58b9b8ff79-rzx5c   1/1     Running   0          4m14s
[root@k8s-80 k8syaml]# kubectl scale deployment nginx --replicas=5    # scale out horizontally to 5 replicas
deployment.apps/nginx scaled
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-58b9b8ff79-2nc2q   1/1     Running   0          7m11s
nginx-58b9b8ff79-f6g6x   1/1     Running   0          2s
nginx-58b9b8ff79-m7n9b   1/1     Running   0          2s
nginx-58b9b8ff79-rzx5c   1/1     Running   0          6m26s
nginx-58b9b8ff79-s6qtx   1/1     Running   0          2s
# Second way to scale: patch (rarely used)
[root@k8s-80 k8syaml]# kubectl patch deployment nginx -p '{"spec":{"replicas":6}}'

Scaling in

[root@k8s-80 k8syaml]# kubectl scale deployment nginx --replicas=3
deployment.apps/nginx scaled
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-58b9b8ff79-2nc2q   1/1     Running   0          9m45s
nginx-58b9b8ff79-m7n9b   1/1     Running   0          2m36s
nginx-58b9b8ff79-rzx5c   1/1     Running   0          9m
# The count can also go below the replicas value in the YAML file, because that value is only the desired state, not a hard limit

Pausing a rollout (rarely used)

[root@k8s-80 k8syaml]# kubectl rollout pause deployment nginx

Resuming a paused rollout (rarely used)

[root@k8s-80 k8syaml]# kubectl rollout resume deployment nginx

Service probes (important)

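For production workloads, keeping services running reliably is the top priority. Handling a failing service promptly so that it does not affect the business, and recovering quickly, has always been a hard problem for development and operations. Kubernetes provides health checks: a service detected as faulty is taken out of rotation automatically, and it is recovered automatically by restarting it.

Liveness probe (LivenessProbe)

Determines whether a container is still alive, that is, whether it is really running. If the LivenessProbe finds the container unhealthy, the kubelet kills the container and then decides how to restart it according to the container's restart policy. If a container defines no LivenessProbe, the kubelet treats the probe result as always successful.

Liveness probes support three methods: ExecAction, TCPSocketAction, and HTTPGetAction.

Exec (command)

This one is a bit more stable and is usually the one to choose.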
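An exec probe is judged only by the exit status of its command: 0 means healthy, anything else counts as a failure. The sketch below mimics a single probe attempt locally; `exec_probe` is a hypothetical helper for illustration, not something Kubernetes ships, and it ignores probe settings such as timeouts and failure thresholds.

```shell
# Mimic one exec-probe attempt: run the command as an argument list,
# discard its output, and judge the result purely by the exit status.
exec_probe() {
  if "$@" >/dev/null 2>&1; then
    echo "healthy"
  else
    echo "unhealthy"
  fi
}

# Each argument is passed separately, like the items of the YAML command list.
exec_probe cat /opt/a.txt        # fails when the file does not exist
exec_probe /bin/sh -c 'true'     # a shell wrapper allows script-style checks

# A single string holding both the program and its argument is treated as
# the program name itself, so this attempt fails even if the file exists.
exec_probe 'cat /opt/a.txt'
```

The last call shows why the command list is written one argument per item: the probe command is executed directly rather than through a shell, so anything that needs shell parsing has to go through `/bin/sh -c`.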

[root@k8s-80 k8syaml]# vim nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.18.0
        livenessProbe:
          exec:
            command:
            - cat
            - /opt/a.txt
            # each argument is its own list item; a single item "cat /opt/a.txt" would be taken as the program name
            # it can also be written as a script:
            #- /bin/sh
            #- -c
            #- touch /tmp/healthy; sleep 30; rm -f /tmp/healthy; sleep 600
          initialDelaySeconds: 5
          timeoutSeconds: 1
        ports:
        - containerPort: 80
# The probe is bound to fail, because /opt/a.txt does not exist
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
deployment.apps/nginx configured
service/nginx configured
ingress.networking.k8s.io/nginx unchanged
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS        RESTARTS   AGE
nginx-58b9b8ff79-rzx5c   1/1     Terminating   0          21m
nginx-74cd54c6d8-6s9xq   1/1     Running       0          6s
nginx-74cd54c6d8-8kk86   1/1     Running       0          5s
nginx-74cd54c6d8-g6fx2   1/1     Running       0          3s
[root@k8s-80 k8syaml]# kubectl describe po nginx-58b9b8ff79-rzx5c    # the events mention the restart
[root@k8s-80 k8syaml]# kubectl get po    # the RESTARTS column shows the restart count
NAME                     READY   STATUS    RESTARTS      AGE
nginx-74cd54c6d8-6s9xq   1/1     Running   2 (60s ago)   3m
nginx-74cd54c6d8-8kk86   1/1     Running   2 (58s ago)   2m59s
nginx-74cd54c6d8-g6fx2   1/1     Running   2 (57s ago)   2m57s

Using a correct condition

# In nginx.yaml, change /opt/a.txt
# to /usr/share/nginx/html/index.html
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
deployment.apps/nginx configured
service/nginx unchanged
ingress.networking.k8s.io/nginx unchanged
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS   AGE
nginx-cf85cd887-cwv9t   1/1     Running   0          15s
nginx-cf85cd887-m4krx   1/1     Running   0          13s
nginx-cf85cd887-xgmhv   1/1     Running   0          12s
[root@k8s-80 k8syaml]# kubectl describe po nginx-cf85cd887-cwv9t    # view the details
[root@k8s-80 k8syaml]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   7h54m
nginx        ClusterIP   10.105.11.148   <none>        80/TCP    7h28m
[root@k8s-80 k8syaml]# kubectl get ing    # view the Ingress
NAME    CLASS   HOSTS         ADDRESS          PORTS   AGE
nginx   nginx   www.lin.com   192.168.188.81   80      7h22m
# 192.168.188.81 is the IP address the Ingress controller assigned in order to serve this Ingress. The RULE column means that all traffic sent to that IP is forwarded to the Kubernetes Service listed under BACKEND
[root@k8s-80 k8syaml]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.101.104.205   <none>        80:30799/TCP,443:31656/TCP   7h32m
ingress-nginx-controller-admission   ClusterIP   10.107.116.128   <none>        443/TCP
# The page now opens normally in the browser

A second condition

# In nginx.yaml, change
- cat
- /usr/share/nginx/html/index.html
# to
- /bin/sh
- -c
- nginx -t
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS   AGE
nginx-fcf47cfcc-6txxf   1/1     Running   0          41s
nginx-fcf47cfcc-fd5qc   1/1     Running   0          43s
nginx-fcf47cfcc-x2qzg   1/1     Running   0          46s
[root@k8s-80 k8syaml]# kubectl describe po nginx-fcf47cfcc-6txxf    # view the details
# The page opens normally in the browser
# Without a readiness probe and a health check, a pod can be Running yet unable to serve, so both probes are usually configured

TCPSocket

[root@k8s-80 k8syaml]# cp nginx.yaml f-tcpsocket.yaml
[root@k8s-80 k8syaml]# vim f-tcpsocket.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.18.0
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5
          timeoutSeconds: 1
        ports:
        - containerPort: 80
[root@k8s-80 k8syaml]# kubectl apply -f f-tcpsocket.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-5f48dd7bb9-6crdg   1/1     Running   0          48s
nginx-5f48dd7bb9-t7q9k   1/1     Running   0          47s
nginx-5f48dd7bb9-tcn2d   1/1     Running   0          49s
[root@k8s-80 k8syaml]# kubectl describe po nginx-5f48dd7bb9-6crdg    # the details show no errors

HTTPGet

# Change the livenessProbe section in f-tcpsocket.yaml
# to
livenessProbe:
  httpGet:
    path: /
    port: 80
    host: 127.0.0.1    # equivalent to http://127.0.0.1:80; the kubelet sends the request from the node, and host defaults to the pod IP when omitted, so leaving it out is usually safer
    scheme: HTTP
[root@k8s-80 k8syaml]# kubectl apply -f f-tcpsocket.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-6795fb5766-2fj42   1/1     Running   0          38s
nginx-6795fb5766-dj75j   1/1     Running   0          38s
nginx-6795fb5766-wblxq   1/1     Running   0          36s

Readiness probe

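Determines whether a container is serving normally, that is, whether the container's Ready condition is True and it can accept requests. If the ReadinessProbe fails, Ready is set to False and the controller removes this Pod's endpoint from the Endpoint list of the corresponding Service. No requests are routed to the Pod from then on, until a later probe succeeds. (The Pod is taken out of rotation and receives no traffic.)

The probe types are the same as for the liveness probe, but the parameters differ; checking the listening port is the better choice here.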

HTTPGet

Probes whether the current Pod can serve external traffic by requesting a URL.
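For an httpGet probe the outcome depends on the HTTP status code: a code of at least 200 and below 400 counts as success, anything else as failure. The sketch below only reproduces that classification; `http_probe_result` is a hypothetical helper, and the codes passed in are examples rather than values captured from the cluster.

```shell
# Classify an HTTP status code the way an httpGet probe does:
# 200 <= code < 400 is a success, everything else is a failure.
http_probe_result() {
  code=$1
  if [ "$code" -ge 200 ] && [ "$code" -lt 400 ]; then
    echo "success"
  else
    echo "failure"
  fi
}

http_probe_result 200   # e.g. a page that exists, such as /index.html
http_probe_result 404   # e.g. a page that does not exist
```

To try this against a real pod, the status code could be fetched with something like `curl -s -o /dev/null -w '%{http_code}' http://<pod-ip>/index.html`, where `<pod-ip>` is a placeholder for an address from `kubectl get po -o wide`.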

# Write a probe that fails, then clean up the resources manually
[root@k8s-80 k8syaml]# vim nginx.yaml
# Add readinessProbe below the livenessProbe section, at the same level as livenessProbe
livenessProbe:
  exec:
    command:
    - /bin/sh
    - -c
    - nginx -t
  initialDelaySeconds: 5
  timeoutSeconds: 1
readinessProbe:
  httpGet:
    port: 80
    path: /demo.html
# Delete the broken pods that cannot start
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS             RESTARTS        AGE
nginx-6795fb5766-2fj42   0/1     CrashLoopBackOff   12 (117s ago)   98m
nginx-6795fb5766-dj75j   0/1     CrashLoopBackOff   12 (113s ago)   98m
nginx-6795fb5766-wblxq   0/1     CrashLoopBackOff   12 (113s ago)   98m
nginx-75b7449cdd-cjxq6   0/1     Running            0               44s
# kubectl delete po NAME    reclaims the resources manually
[root@k8s-80 k8syaml]# kubectl delete po nginx-6795fb5766-2fj42
pod "nginx-6795fb5766-2fj42" deleted
[root@k8s-80 k8syaml]# kubectl delete po nginx-6795fb5766-dj75j
pod "nginx-6795fb5766-dj75j" deleted
[root@k8s-80 k8syaml]# kubectl delete po nginx-6795fb5766-wblxq
pod "nginx-6795fb5766-wblxq" deleted
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS      AGE
nginx-6795fb5766-grb2w   1/1     Running   0             3s
nginx-6795fb5766-r9ktt   1/1     Running   0             12s
nginx-6795fb5766-z2hxg   1/1     Running   0             19s
nginx-75b7449cdd-cjxq6   0/1     Running   1 (32s ago)   92s
# Change
readinessProbe:
  httpGet:
    port: 80
    path: /demo.html
# to
readinessProbe:
  httpGet:
    port: 80
    path: /index.html
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS     AGE
nginx-d997687df-2mrvd   1/1     Running   1 (8s ago)   68s
nginx-d997687df-gzw4p   1/1     Running   1 (9s ago)   69s
nginx-d997687df-vk4vn   1/1     Running   1 (2s ago)   62s

TCPSocket

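Probes whether the service can serve external traffic by trying to open a TCP connection to a port (a connection attempt, not an ICMP ping).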
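The check amounts to "can a TCP connection be opened to this port". The sketch below approximates one such attempt from a shell; `tcp_probe` is a hypothetical helper, and it assumes that bash (for its `/dev/tcp` redirection) and the coreutils `timeout` command are available on the machine.

```shell
# Mimic one tcpSocket probe attempt: report "ready" only if a TCP
# connection to host:port can be opened within one second.
# Assumption: bash and coreutils `timeout` are installed.
tcp_probe() {
  host=$1
  port=$2
  if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "ready"
  else
    echo "not ready"
  fi
}

# The result depends on whether something listens on the port locally.
tcp_probe 127.0.0.1 80
```

Unlike the HTTP check, this says nothing about whether the application answers requests correctly; it only confirms that the port accepts connections.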

# In nginx.yaml
# change
readinessProbe:
  httpGet:
    port: 80
    path: /index.html
# to
readinessProbe:
  tcpSocket:
    port: 80
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get po
NAME                    READY   STATUS    RESTARTS   AGE
nginx-68b7599f6-dhkvs   1/1     Running   0          32s
nginx-68b7599f6-zdvtd   1/1     Running   0          34s
nginx-68b7599f6-zlpjq   1/1     Running   0          33s

For more detail and other options, see "Kubernetes 进阶教程.pdf".

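K8S monitoring component metrics-server (important)

Until the metrics component is installed, kubectl top node cannot be used.

Choose the metrics-server version that matches your k8s version; a YAML manifest is available for download. Here the manifest is downloaded and uploaded to the server first, then checked for the images it needs and which of them cannot be pulled; those are pulled with the help of Alibaba Cloud. Because this is a test environment, the "--kubelet-insecure-tls" argument is added so that the CA of the serving certificates presented by the kubelets is not verified. This is for testing only.

It is added at the following position: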

# Find out which image is needed
[root@k8s-80 k8syaml]# cat components.yaml | grep "image"
        image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
# Pull a copy built on Alibaba Cloud and retag it to the image address used in components.yaml
[root@k8s-master ~]# docker pull registry.cn-guangzhou.aliyuncs.com/uplooking-class2/metrics:v0.6.1
[root@k8s-master ~]# docker tag registry.cn-guangzhou.aliyuncs.com/uplooking-class2/metrics:v0.6.1 k8s.gcr.io/metrics-server/metrics-server:v0.6.1
Note: if the image cannot be pulled, point the image field at the Alibaba Cloud registry
[root@k8s-master ~]# vim components.yaml
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.cn-guangzhou.aliyuncs.com/uplooking-class2/metrics:v0.6.1
        #image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
[root@k8s-80 k8syaml]# kubectl apply -f components.yaml
[root@k8s-80 k8syaml]# kubectl get po -n kube-system
NAME                             READY   STATUS    RESTARTS       AGE
coredns-78fcd69978-d8cv5         1/1     Running   4 (128m ago)   10h
coredns-78fcd69978-qp7f6         1/1     Running   4 (128m ago)   10h
etcd-k8s-80                      1/1     Running   4 (127m ago)   10h
kube-apiserver-k8s-80            1/1     Running   4 (127m ago)   10h
kube-controller-manager-k8s-80   1/1     Running   4 (127m ago)   10h
kube-flannel-ds-88kmk            1/1     Running   4 (128m ago)   10h
kube-flannel-ds-wfvst            1/1     Running   4 (128m ago)   10h
kube-flannel-ds-wq2vz            1/1     Running   4 (127m ago)   10h
kube-proxy-6t72l                 1/1     Running   4 (128m ago)   10h
kube-proxy-84vzc                 1/1     Running   4 (127m ago)   10h
kube-proxy-dllpx                 1/1     Running   4 (128m ago)   10h
kube-scheduler-k8s-80            1/1     Running   4 (127m ago)   10h
metrics-server-6d54b97f-qwcqg    1/1     Running   2 (128m ago)   8h
[root@k8s-80 k8syaml]# kubectl top node
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-80   155m         7%     924Mi           49%
k8s-81   34m          3%     536Mi           28%
k8s-82   46m          4%     515Mi           27%
[root@k8s-80 k8syaml]# kubectl top pod
NAME                    CPU(cores)   MEMORY(bytes)
nginx-68b7599f6-fs6rp   4m           147Mi
nginx-68b7599f6-wzffm   7m           147Mi

Creating an HPA

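In production, unexpected things always happen. For example, traffic to the company website may suddenly spike so that the existing Pods can no longer carry all the requests, and operations staff cannot watch the service 24 hours a day. Configuring an HPA handles this case: under high load it automatically increases the number of Pod replicas to spread the concurrent traffic, and once traffic returns to normal it automatically reduces the number of Pods. The HPA scales the Pod count based on CPU and memory utilization, which is measured relative to the requested resources, so the Requests parameter must be defined in order to use an HPA.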
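The scaling decision follows the formula given in the Kubernetes documentation: desired replicas = ceil(current replicas × current utilization / target utilization), limited to the configured minimum and maximum. The sketch below works through that arithmetic with integers; `hpa_desired_replicas` is a hypothetical helper, the input numbers are made up for illustration, and details such as the controller's tolerance band and stabilization window are left out.

```shell
# desired = ceil(current_replicas * current_utilization / target_utilization),
# then clamped to [min, max]. Utilization values are whole percentages
# of the CPU request.
hpa_desired_replicas() {
  current=$1 util=$2 target=$3 min=$4 max=$5
  # Integer ceiling division: add (target - 1) before dividing.
  desired=$(( (current * util + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

# 3 pods averaging 40% CPU against a 10% target, limits 2..10:
# the raw result is 12, which is capped at the maximum of 10.
hpa_desired_replicas 3 40 10 2 10

# 2 pods averaging 15% against a 10% target: scales to 3.
hpa_desired_replicas 2 15 10 2 10
```

With a 200m CPU request and a 10% target, each pod only needs to average more than about 20m of CPU for the ratio to exceed 1, which is why even a modest load test is enough to trigger scaling in a setup like this one.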

[root@k8s-80 k8syaml]# vi nginx.yaml
# Addition 1: append the following
---
apiVersion: autoscaling/v2beta1
#apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    name: nginx
    kind: Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization: 10
# Addition 2: at the same level as livenessProbe
resources:
  limits:
    cpu: 200m
    memory: 500Mi
  requests:
    cpu: 200m
    memory: 500Mi
# Production needs at least 2 pods to guarantee high availability
# It is still advisable to keep the two settings consistent to avoid problems: the Deployment's replica count is a desired value, while the HPA's is a range; in the later configuration I set both to 3
[root@k8s-80 k8syaml]# kubectl explain hpa    # check the apiVersion
[root@k8s-80 k8syaml]# kubectl delete -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
[root@k8s-80 k8syaml]# kubectl get hpa
NAME    REFERENCE          TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
nginx   Deployment/nginx   0%/10%    2         10        3          3m44s
[root@k8s-master ~]# kubectl get pod
NAME                    READY   STATUS    RESTARTS   AGE
nginx-cb994dfb7-9lxlw   0/1     Pending   0          4s
nginx-cb994dfb7-t6zjv   0/1     Pending   0          4s
nginx-cb994dfb7-tdhpb   1/1     Running   0          4s
[root@k8s-master ~]# kubectl describe pod nginx-cb994dfb7-9lxlw
Events:
  Type     Reason            Age   From               Message
  ----     ------            ----  ----               -------
  Warning  FailedScheduling  38s   default-scheduler  0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 Insufficient memory.
Note: this error occurs because the requests values are too large for the memory available on the nodes; lower the requests values of the objects concerned
[root@k8s-master ~]# vim nginx.yaml
resources:
  limits:
    cpu: 200m
    memory: 200Mi
  requests:
    cpu: 200m
    memory: 200Mi
[root@k8s-80 k8syaml]# kubectl get po
NAME                     READY   STATUS    RESTARTS   AGE
nginx-77f85b76b9-f66ll   1/1     Running   0          3m55s
nginx-77f85b76b9-hknv9   1/1     Running   0          3m55s
nginx-77f85b76b9-n54pp   1/1     Running   0          3m55s
# Between the HPA and the Deployment's replica count, whichever asks for more pods wins

The modified nginx.yaml in full

nginx.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  #type: NodePort
  selector:
    app: nginx
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.18.0
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 5
          timeoutSeconds: 1
        readinessProbe:
          httpGet:
            port: 80
            path: /index.html
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 200m
            memory: 200Mi
        ports:
        - containerPort: 80
---
apiVersion: autoscaling/v2beta1
#apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    name: nginx
    kind: Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization: 10

Internal load test

[root@k8s-80 k8syaml]# kubectl delete -f cname.yaml
[root@k8s-80 k8syaml]# kubectl delete -f f-tcpsocket.yaml
[root@k8s-80 k8syaml]# kubectl get po
[root@k8s-80 k8syaml]# kubectl get svc
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP   19h
nginx        ClusterIP   10.98.161.97   <none>        80/TCP    4m15s
[root@k8s-80 k8syaml]# curl 10.98.161.97    # returns the page source
[root@k8s-80 k8syaml]# yum install -y httpd-tools
[root@k8s-80 k8syaml]# ab -c 1000 -n 200000 http://10.98.161.97/
# Watch live from another terminal
[root@k8s-80 k8syaml]# watch "kubectl get po"
or
[root@k8s-80 k8syaml]# kubectl get pods -w

Exporting configuration as YAML

[root@k8s-80 k8syaml]# kubectl get po -n kube-system
NAME                             READY   STATUS    RESTARTS       AGE
coredns-78fcd69978-d8cv5         1/1     Running   4 (132m ago)   10h
coredns-78fcd69978-qp7f6         1/1     Running   4 (132m ago)   10h
etcd-k8s-80                      1/1     Running   4 (132m ago)   10h
kube-apiserver-k8s-80            1/1     Running   4 (132m ago)   10h
kube-controller-manager-k8s-80   1/1     Running   4 (132m ago)   10h
kube-flannel-ds-88kmk            1/1     Running   4 (132m ago)   10h
kube-flannel-ds-wfvst            1/1     Running   4 (132m ago)   10h
kube-flannel-ds-wq2vz            1/1     Running   4 (132m ago)   10h
kube-proxy-6t72l                 1/1     Running   4 (132m ago)   10h
kube-proxy-84vzc                 1/1     Running   4 (132m ago)   10h
kube-proxy-dllpx                 1/1     Running   4 (132m ago)   10h
kube-scheduler-k8s-80            1/1     Running   4 (132m ago)   10h
metrics-server-6d54b97f-qwcqg    1/1     Running   2 (132m ago)   8h
# Each command below appends its own List document; if the file is meant to be re-applied, separate them with a "---" line or export in one go with kubectl get deployment,svc,ing -o yaml > test.yaml
[root@k8s-80 k8syaml]# kubectl get deployment -o yaml >> test.yaml
[root@k8s-80 k8syaml]# kubectl get svc -o yaml >> test.yaml
[root@k8s-80 k8syaml]# kubectl get ing -o yaml >> test.yaml

Creating StatefulSets

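StatefulSet is designed for stateful services (Deployments and ReplicaSets are designed for stateless ones). Its use cases include:

- Stable persistent storage: after a Pod is rescheduled it can still reach the same persistent data, implemented with PVCs

- Stable network identity: after a Pod is rescheduled its PodName and HostName stay the same, implemented with a Headless Service (a Service without a Cluster IP)

- Ordered deployment and ordered scale-out: Pods are ordered, and deployment or scale-out proceeds strictly in the defined order (from 0 to N-1; before the next Pod starts, all preceding Pods must be Running and Ready), which the StatefulSet controller enforces

- Ordered scale-in and ordered deletion (from N-1 to 0)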
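The ordering guarantee is easier to picture with concrete names. The sketch below simply prints the pod names in the order described; `sts_pod_order` is a hypothetical helper, and the name `mysql` with 3 replicas is an assumed example rather than output from a cluster.

```shell
# Print StatefulSet pod names in creation order (0..N-1) when the
# direction is "up", or in deletion order (N-1..0) otherwise.
sts_pod_order() {
  name=$1 replicas=$2 direction=$3
  if [ "$direction" = "up" ]; then
    i=0
    while [ "$i" -lt "$replicas" ]; do
      echo "$name-$i"
      i=$((i + 1))
    done
  else
    i=$((replicas - 1))
    while [ "$i" -ge 0 ]; do
      echo "$name-$i"
      i=$((i - 1))
    done
  fi
}

sts_pod_order mysql 3 up     # creation / scale-out order
sts_pod_order mysql 3 down   # scale-in / deletion order
```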

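From these use cases, a StatefulSet setup consists of the following parts:

- A Headless Service that defines the network identity (DNS domain)

- volumeClaimTemplates used to create PersistentVolumes

- The StatefulSet that defines the application itself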
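With a Headless Service in place, each pod gets a predictable DNS name of the form pod-name.service-name.namespace.svc.cluster-domain. The sketch below just assembles that name; `sts_pod_dns` is a hypothetical helper, and it assumes the default cluster domain `cluster.local`, which matches the nslookup output seen earlier in this walkthrough.

```shell
# Build the stable DNS name of one StatefulSet pod.
# $1: StatefulSet name, $2: governing (headless) Service name,
# $3: namespace, $4: pod ordinal.
# Assumption: the cluster domain is the default "cluster.local".
sts_pod_dns() {
  sts=$1 svc=$2 ns=$3 ordinal=$4
  echo "$sts-$ordinal.$svc.$ns.svc.cluster.local"
}

sts_pod_dns mysql mysql default 0
```

These per-pod names only resolve when the Service named in the StatefulSet's serviceName field is headless (clusterIP: None); with an ordinary ClusterIP or NodePort Service only the Service name itself resolves.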

[root@k8s-80 k8syaml]# cp nginx.yaml mysql.yaml
[root@k8s-80 k8syaml]# vim mysql.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  type: NodePort
  selector:
    app: mysql
  ports:
    - name: http
      protocol: TCP
      port: 3306
      targetPort: 3306
      nodePort: 30005
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  serviceName: "mysql"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
[root@k8s-80 k8syaml]# kubectl apply -f mysql.yaml
[root@k8s-80 k8syaml]# kubectl get po
[root@k8s-80 k8syaml]# kubectl describe po mysql-0
[root@k8s-80 k8syaml]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    43h
mysql        ClusterIP   10.103.12.104   <none>        3306/TCP   60s

Packaging a project into k8s

This simulates a CI/CD flow on k8s, using WordPress as the project.

1. Write the Dockerfile

    # Upload wordpress-5.9.2-zh_CN.tar.gz to /k8syaml
    # The archive is a gzipped tarball, so extract it with tar rather than unzip
    [root@k8s-80 k8syaml]# tar -xzf wordpress-5.9.2-zh_CN.tar.gz
    [root@k8s-80 k8syaml]# vim Dockerfile
    FROM registry.cn-shenzhen.aliyuncs.com/adif0028/nginx_php:74v3
    COPY wordpress /usr/local/nginx/html
    [root@k8s-80 k8syaml]# docker build -t wordpress:v1.0 .

2. Push to a registry (Alibaba Cloud is used here)

    # Log in
    docker login --username=lzzdd123 registry.cn-guangzhou.aliyuncs.com
    # Tag the image
    # docker tag [ImageId] registry.cn-guangzhou.aliyuncs.com/uplooking-class2/lnmp-wordpress:[image-version]
    [root@k8s-80 k8syaml]# docker images | grep wordpress
    [root@k8s-80 k8syaml]# docker tag 9e0b6b120f79 registry.cn-guangzhou.aliyuncs.com/uplooking-class2/lnmp-wordpress:[image-version]
    # Push
    [root@k8s-80 k8syaml]# docker push registry.cn-guangzhou.aliyuncs.com/uplooking-class2/lnmp-wordpress:[image-version]

3. Edit nginx.yaml

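Replace the image with the nginx-based image pushed above, rename the service, and so on. Note that if the Ingress is enabled, ingressClassName must be set to nginx.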

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web
      labels:
        app: web
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web
      template:
        metadata:
          labels:
            app: web
        spec:
          containers:
          - name: web
            image: registry.cn-guangzhou.aliyuncs.com/uplooking-class2/lnmp-wordpress:v1.0
            livenessProbe:
              exec:
                command:
                - /bin/sh
                - -c
                - nginx -t
              initialDelaySeconds: 5
              timeoutSeconds: 1
            readinessProbe:
              tcpSocket:
                port: 80
            ports:
            - containerPort: 80
            resources:
              limits:
                cpu: 200m
                memory: 200Mi
              requests:
                cpu: 200m
                memory: 200Mi
    ---
    # Only one apiVersion may be active; the metrics syntax below is the v2beta1 form
    apiVersion: autoscaling/v2beta1
    #apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: web
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        name: web
        kind: Deployment
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          targetAverageUtilization: 100
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: web
    spec:
      type: NodePort
      selector:
        app: web
      ports:
      - name: http
        protocol: TCP
        port: 80
        targetPort: 80
        nodePort: 30005
    #---
    #apiVersion: networking.k8s.io/v1
    #kind: Ingress
    #metadata:
    #  name: web
    #  annotations:
    #    nginx.ingress.kubernetes.io/rewrite-target: /
    #spec:
    #  ingressClassName: nginx
    #  rules:
    #  - host: www.lin.com
    #    http:
    #      paths:
    #      - path: /
    #        pathType: Prefix
    #        backend:
    #          service:
    #            name: web
    #            port:
    #              number: 80

4. Check the Ingress

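Check the existing Ingress objects so that they do not conflict with the newly edited nginx.yaml: the `name: web` under `metadata` of `kind: Ingress` must not match an Ingress that already exists.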

    [root@k8s-80 k8syaml]# kubectl get ing

5. Create and run

    [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
    [root@k8s-80 k8syaml]# kubectl get po
    NAME                   READY   STATUS    RESTARTS        AGE
    mysql-0                1/1     Running   2 (3m47s ago)   3h34m
    web-69f7d585c9-5tjz8   1/1     Running   1 (3m39s ago)   5m50s

6. Make changes inside the container

    [root@k8s-80 k8syaml]# kubectl exec -it web-69f7d585c9-5tjz8 -- bash
    [root@web-69f7d585c9-5tjz8 /]# cd /usr/local/nginx/conf/
    [root@web-69f7d585c9-5tjz8 conf]# vi nginx.conf
    # Change: root /usr/local/nginx/html;
    # To:     root /data;
    [root@web-69f7d585c9-5tjz8 conf]# nginx -s reload
    [root@web-69f7d585c9-5tjz8 conf]# vi /usr/local/php/etc/php.ini
    # Change (commented out):
    #   ;session.save_handler = files
    #   ;session.save_path = "/tmp"
    # To (uncommented):
    #   session.save_handler = files
    #   session.save_path = "/tmp"
    [root@web-69f7d585c9-5tjz8 conf]# /etc/init.d/php-fpm restart

7. Check in the browser

    [root@k8s-80 k8syaml]# kubectl get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    2d1h
    mysql        ClusterIP   10.103.12.104   <none>        3306/TCP   5h40m
    web          ClusterIP   10.99.30.175    <none>        80/TCP     131m

Node selectors

    [root@k8s-80 k8syaml]# kubectl get no
    NAME     STATUS   ROLES                  AGE    VERSION
    k8s-80   Ready    control-plane,master   2d2h   v1.22.3
    k8s-81   Ready    bus,php                2d2h   v1.22.3
    k8s-82   Ready    bus,go                 2d2h   v1.22.3
    [root@k8s-80 k8syaml]# kubectl get po -o wide    # both workloads landed on k8s-81
    NAME                   READY   STATUS    RESTARTS        AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0                1/1     Running   2 (3h14m ago)   6h45m   10.244.1.155   k8s-81   <none>           <none>
    web-69f7d585c9-5tjz8   1/1     Running   1 (3h14m ago)   3h16m   10.244.1.157   k8s-81   <none>           <none>

Targeted scheduling

    # Edit nginx.yaml: add this block just above containers:, at the same level as containers
    # Node labels such as node-role.kubernetes.io/php: "true" can be found with: kubectl describe no k8s-81
        spec:
          nodeSelector:
            node-role.kubernetes.io/go: "true"
          containers:
    # Redeploy
    [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
    # Verify
    [root@k8s-80 k8syaml]# kubectl get po
    NAME                   READY   STATUS    RESTARTS        AGE
    mysql-0                1/1     Running   2 (3h25m ago)   6h56m
    web-7469846f96-77j2g   1/1     Running   0               76s
    [root@k8s-80 k8syaml]# kubectl get po -o wide
    NAME                   READY   STATUS    RESTARTS        AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0                1/1     Running   2 (3h25m ago)   6h56m   10.244.1.155   k8s-81   <none>           <none>
    web-7469846f96-77j2g   1/1     Running   0               78s     10.244.2.108   k8s-82   <none>           <none>

Spreading Pods across nodes

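A hard (required) affinity rule is used here, in the form of Pod anti-affinity: the scheduler must satisfy it or leave the Pod unscheduled.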
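For contrast with the hard constraint used in this section, here is a minimal sketch of the soft form. It is not part of the original walkthrough, and the weight of 100 is an arbitrary illustrative value. With `preferredDuringSchedulingIgnoredDuringExecution` the scheduler tries to keep `app: web` Pods on different nodes but still places a Pod when no such node is available, so nothing is left Pending for this reason.

```yaml
# Fragment of the Deployment's Pod template (spec.template.spec); soft anti-affinity variant
spec:
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100                      # assumed weight, valid range is 1-100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - web
          topologyKey: kubernetes.io/hostname
  containers:
  - name: web
    image: registry.cn-guangzhou.aliyuncs.com/uplooking-class2/lnmp-wordpress:v1.0
```

The hard form gives a strict one-Pod-per-node guarantee at the cost of Pending Pods when nodes run out; the soft form trades that guarantee for availability.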

    # Edit nginx.yaml: add this block above containers:, and update the label
    # Set replicas (Deployment) and minReplicas (HPA) both to 2;
    # from here on, "changing the replica count" always means changing both places
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - web
                topologyKey: kubernetes.io/hostname
          nodeSelector:
            node-role.kubernetes.io/bus: "true"
    [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
    [root@k8s-80 k8syaml]# kubectl get po
    NAME                   READY   STATUS    RESTARTS        AGE
    mysql-0                1/1     Running   2 (3h46m ago)   7h16m
    web-7469846f96-77j2g   1/1     Running   0               21m
    web-7469846f96-vqxkq   1/1     Running   0               4m2s
    web-7f94dd4894-f7glb   0/1     Pending   0               4m2s
    [root@k8s-80 k8syaml]# kubectl get po -o wide
    NAME                   READY   STATUS    RESTARTS        AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0                1/1     Running   2 (3h46m ago)   7h17m   10.244.1.155   k8s-81   <none>           <none>
    web-7469846f96-77j2g   1/1     Running   0               22m     10.244.2.108   k8s-82   <none>           <none>
    web-7469846f96-vqxkq   1/1     Running   0               4m49s   10.244.2.109   k8s-82   <none>           <none>
    web-7f94dd4894-f7glb   0/1     Pending   0               4m49s   <none>         <none>   <none>           <none>
    # One Pod stays Pending and cannot be scheduled: the scheduler checks each node for an
    # existing matching Pod, and a node that already runs one is rejected by the anti-affinity rule
    # Workaround: set the replica count to 1 and apply; one Pod then hangs in Terminating and is
    # neither removed nor rescheduled, so delete one Pod by hand to let the rollout proceed,
    # then set the replica count back to 2
    # After the deletion
    [root@k8s-80 k8syaml]# kubectl get po -o wide
    NAME                   READY   STATUS    RESTARTS        AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0                1/1     Running   2 (3h56m ago)   7h27m   10.244.1.155   k8s-81   <none>           <none>
    web-7f94dd4894-jmt5p   1/1     Running   0               82s     10.244.2.110   k8s-82   <none>           <none>

If a taint was added automatically

    # Check with this command, or with: kubectl get no -o yaml | grep taint -A 5
    [root@k8s-80 k8syaml]# kubectl describe node | grep Taint
    Taints:             node-role.kubernetes.io/master:NoSchedule
    Taints:             <none>
    Taints:             <none>
    [root@k8s-80 k8syaml]# kubectl describe node k8s-81 | grep Taint
    Taints:             <none>
    [root@k8s-80 k8syaml]# kubectl describe node k8s-80 | grep Taint    # k8s-80 is the tainted node
    Taints:             node-role.kubernetes.io/master:NoSchedule

Fix

    # Remove the NoSchedule taint; the trailing "-" means delete
    [root@k8s-80 k8syaml]# kubectl taint nodes k8s-80 node-role.kubernetes.io/master:NoSchedule-
    node/k8s-80 untainted
    # Verify
    [root@k8s-80 k8syaml]# kubectl describe node | grep Taint
    Taints:             <none>
    Taints:             <none>
    Taints:             <none>

Result of hard anti-affinity scheduling

    [root@k8s-80 k8syaml]# kubectl get po -o wide
    NAME                  READY   STATUS    RESTARTS        AGE     IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0               1/1     Running   2 (4h28m ago)   7h59m   10.244.1.155   k8s-81   <none>           <none>
    web-c94cbcc58-6q9d9   1/1     Running   0               4s      10.244.2.112   k8s-82   <none>           <none>
    web-c94cbcc58-nc449   1/1     Running   0               4s      10.244.1.159   k8s-81   <none>           <none>

Data persistence

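A Pod is made up of containers, and when a container crashes or stops, the data inside it is lost. Any Kubernetes cluster therefore has to deal with storage, and volumes exist precisely so that Pods can keep data. There are many volume types; the four most commonly used are emptyDir, hostPath, NFS and cloud or distributed storage (Ceph, GlusterFS and so on).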

emptyDir

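An emptyDir volume is created when a Pod is assigned to a node. Kubernetes allocates a directory on the node automatically, so there is no need to specify a host directory. The directory starts out empty, and when the Pod is removed from the node the data in the emptyDir is deleted permanently. emptyDir volumes are mainly used as temporary directories for applications that do not need to keep the data.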

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pd
    spec:
      containers:
      - image: registry.k8s.io/test-webserver
        name: test-container
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        emptyDir:
          sizeLimit: 500Mi

hostPath (comparable to a Docker bind mount)

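A hostPath volume maps a file or directory from the node's filesystem into the Pod. When using hostPath you can also set the `type` field; the supported values are DirectoryOrCreate, Directory, FileOrCreate, File, Socket, CharDevice and BlockDevice (leaving it empty skips the check).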

    [root@k8s-80 k8syaml]# kubectl delete -f mysql.yaml
    # Edit mysql.yaml and add the following under containers
    # (volumeMounts at the same level as env, volumes at the same level as containers)
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
          volumes:
          - name: data
            hostPath:
              path: /data/mysqldata
              type: DirectoryOrCreate
    [root@k8s-80 k8syaml]# kubectl apply -f mysql.yaml
    # See which node the Pod landed on
    [root@k8s-80 k8syaml]# kubectl get po -o wide
    NAME                  READY   STATUS    RESTARTS   AGE   IP             NODE     NOMINATED NODE   READINESS GATES
    mysql-0               1/1     Running   0          28s   10.244.1.160   k8s-81   <none>           <none>
    web-c94cbcc58-6q9d9   1/1     Running   0          29m   10.244.2.112   k8s-82   <none>           <none>
    web-c94cbcc58-nc449   1/1     Running   0          29m   10.244.1.159   k8s-81   <none>           <none>
    # On k8s-81, verify that the files were created
    [root@k8s-81 ~]# ls /data/mysqldata

NFS mounts

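An nfs volume lets us mount an existing NFS share into a Pod. Unlike emptyDir, which is deleted together with the Pod, the contents of an nfs volume are kept when the Pod is deleted; the share is simply unmounted. This means data can be prepared in advance and passed between Pods, and one NFS share can be mounted by several Pods at the same time for reading and writing.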

| Hostname | IP | Notes |
|---|---|---|
| nfs | 192.168.188.17 | nfs |

- Install NFS on all nodes

      yum install nfs-utils.x86_64 rpcbind -y
      systemctl start rpcbind nfs-utils.service
      systemctl enable nfs-utils rpcbind

- Configure NFS

      [root@nfs ~]# mkdir -p /data/v{1..5}
      [root@nfs ~]# cat > /etc/exports <<EOF
      /data/v1 192.168.245.*(rw,no_root_squash)
      /data/v2 192.168.245.*(rw,no_root_squash)
      /data/v3 192.168.245.*(rw,no_root_squash)
      /data/v4 192.168.245.*(rw,no_root_squash)
      /data/v5 192.168.245.*(rw,no_root_squash)
      EOF
      [root@nfs ~]# exportfs -arv
      exporting 192.168.245.*:/data/v5
      exporting 192.168.245.*:/data/v4
      exporting 192.168.245.*:/data/v3
      exporting 192.168.245.*:/data/v2
      exporting 192.168.245.*:/data/v1
      [root@nfs ~]# showmount -e
      Export list for nfs:
      /data/v5 192.168.245.*
      /data/v4 192.168.245.*
      /data/v3 192.168.245.*
      /data/v2 192.168.245.*
      /data/v1 192.168.245.*

- Create a Pod that uses NFS

      # Edit nginx.yaml: set the replica count to 1 and add volumeMounts and volumes
              - containerPort: 80
              volumeMounts:
              - mountPath: /usr/local/nginx/html/
                name: nfs
            volumes:
            - name: nfs
              nfs:
                path: /data/v1
                server: 192.168.188.17
      [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
      [root@k8s-80 k8syaml]# kubectl get po
      NAME                   READY   STATUS    RESTARTS   AGE
      mysql-0                1/1     Running   0          52m
      web-5d689d77d6-zwfnq   1/1     Running   0          93s
      # The mount information shows up here
      [root@k8s-80 k8syaml]# kubectl describe po web-5d689d77d6-zwfnq
      # On the NFS server
      [root@nfs ~]# echo "this is 17" > /data/v1/index.html
      # Open http://192.168.245.210:30005/ in a browser

PV and PVC

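A PersistentVolume (PV) is a piece of network storage in the cluster that has been provisioned by an administrator. It is a cluster resource, just as a node is a cluster resource. PVs are volume plugins like ordinary volumes, but their lifecycle is independent of any individual Pod that uses the PV. The API object captures the details of the storage implementation, be it NFS, iSCSI, or a storage system specific to a cloud provider or a platform such as Ceph.

A PersistentVolumeClaim (PVC) is a user's request for storage. The usage logic is as follows: the Pod defines a volume of type PVC, and the claim states the size it needs. A PVC must be bound to a matching PV: the PVC requests storage from the available PVs according to its definition, and the PVs themselves are carved out of the underlying storage. PV and PVC are both storage resources abstracted by Kubernetes.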

PV access modes (accessModes)

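| Mode | Meaning |
|---|---|
| ReadWriteOnce (RWO) | Read-write, but can be mounted by a single node only |
| ReadOnlyMany (ROX) | Read-only, can be mounted by many nodes |
| ReadWriteMany (RWX) | Read-write, can be mounted and shared by many nodes |

Not every storage type supports all three modes. Shared read-write access in particular is supported by relatively few backends; NFS is the most common one. When a PVC is bound to a PV, the match is usually made on two criteria: storage size and access mode.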

PV reclaim policies (persistentVolumeReclaimPolicy)

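| Policy | Meaning |
|---|---|
| Retain | No cleanup; the volume is kept and must be cleaned up manually |
| Recycle | Delete the data, i.e. `rm -rf /thevolume/*` (supported by NFS and HostPath only) |
| Delete | Delete the underlying storage asset, for example the AWS EBS volume (supported by cloud volumes such as AWS EBS, GCE PD and Azure Disk) |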
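To make the policy table concrete, here is a minimal sketch of a PV that sets the reclaim policy explicitly. The name `pv-retain-demo` and the 1Gi capacity are hypothetical; the NFS server address and export path are the ones from this lab. Manually created PVs default to Retain when the field is omitted, so spelling it out mainly documents the intent.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-retain-demo                    # hypothetical name
spec:
  capacity:
    storage: 1Gi                          # assumed size
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  nfs:
    path: /data/v1
    server: 192.168.188.17
```

With Retain, deleting the bound PVC moves the PV to Released rather than back to Available, and an administrator has to clean up the data and the PV before the storage can be claimed again.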

PV states

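| State | Meaning |
|---|---|
| Available | Free, not yet bound to a claim |
| Bound | Bound to a PVC |
| Released | The PVC has been removed but the reclaim policy has not been carried out yet |
| Failed | An error occurred |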

Creating PVs

    [root@k8s-80 k8syaml]# vim pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv001
      labels:
        app: pv001
    spec:
      nfs:
        path: /data/v2
        server: 192.168.188.17
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv002
      labels:
        app: pv002
    spec:
      nfs:
        path: /data/v3
        server: 192.168.188.17
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 10Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv003
      labels:
        app: pv003
    spec:
      nfs:
        path: /data/v4
        server: 192.168.188.17
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 15Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv004
      labels:
        app: pv004
    spec:
      nfs:
        path: /data/v5
        server: 192.168.188.17
      accessModes:
      - "ReadWriteMany"
      - "ReadWriteOnce"
      capacity:
        storage: 20Gi
    [root@k8s-80 k8syaml]# kubectl apply -f pv.yaml
    persistentvolume/pv001 created
    persistentvolume/pv002 created
    persistentvolume/pv003 created
    persistentvolume/pv004 created

Viewing PVs

    # List the PVs
    [root@k8s-80 k8syaml]# kubectl get pv
    NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
    pv001   5Gi        RWO,RWX        Retain           Available                                   26s
    pv002   10Gi       RWO,RWX        Retain           Available                                   26s
    pv003   15Gi       RWO,RWX        Retain           Available                                   26s
    pv004   20Gi       RWO,RWX        Retain           Available                                   26s

Using a PVC

    [root@k8s-80 k8syaml]# vim pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc
    spec:
      accessModes:
      - "ReadWriteMany"
      resources:
        requests:
          storage: "12Gi"
    [root@k8s-80 k8syaml]# kubectl apply -f pvc.yaml
    persistentvolumeclaim/pvc created
    # Check: the 12Gi request is bound to pv003 (15Gi), the smallest PV that is large enough
    [root@k8s-80 k8syaml]# kubectl get pvc
    NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    pvc    Bound    pv003    15Gi       RWO,RWX                       21s
    # Edit nginx.yaml
            resources:
              limits:
                cpu: 200m
                memory: 200Mi
              requests:
                cpu: 200m
                memory: 200Mi
            volumeMounts:
            - mountPath: /usr/local/nginx/html/
              name: shy
          volumes:
          - name: shy
            persistentVolumeClaim:
              claimName: pvc
    [root@k8s-80 k8syaml]# kubectl apply -f nginx.yaml
    # Check and verify
    [root@k8s-80 k8syaml]# kubectl get po
    NAME                   READY   STATUS    RESTARTS   AGE
    mysql-0                1/1     Running   0          102m
    web-5c49d99965-qsf7r   1/1     Running   0          41s
    [root@k8s-80 k8syaml]# kubectl describe po web-5c49d99965-qsf7r
    [root@k8s-80 k8syaml]# kubectl get pv    # the claim is bound
    NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM         STORAGECLASS   REASON   AGE
    pv001   5Gi        RWO,RWX        Retain           Available                                         38m
    pv002   10Gi       RWO,RWX        Retain           Available                                         38m
    pv003   15Gi       RWO,RWX        Retain           Bound       default/pvc                           38m
    pv004   20Gi       RWO,RWX        Retain           Available                                         38m
    # On the NFS server (pv003 is backed by /data/v4)
    [root@nfs ~]# echo "iPhone 14 Pro Max" > /data/v4/index.html
    # Verify in the browser

StorageClass

Note: this is mainly relevant for large clusters, such as those run by big companies.

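A large Kubernetes cluster may hold thousands of PVCs, which means operators would have to create that many PVs in advance. As projects evolve, new PVCs keep arriving, so operators would also have to keep adding suitable PVs; otherwise new Pods fail to start because their PVC cannot be bound to any PV. On top of that, a PVC that merely requests a certain amount of space often does not capture everything an application needs from its storage, and different applications have different performance requirements, such as read/write speed and concurrency. To address this, Kubernetes introduced another resource object, StorageClass. With StorageClass definitions, an administrator can classify storage into types such as fast storage and slow storage. The description in a StorageClass makes the characteristics of each kind of storage explicit, so that storage can be requested to suit the application.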
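As a rough sketch of how this looks in practice, the manifest below defines one StorageClass and a PVC that requests storage from it by name. Everything here is illustrative: the class name `fast`, the claim name `pvc-fast` and the 5Gi size are assumptions, and `example.com/nfs` is a placeholder, not a real provisioner. An actual cluster needs an external provisioner deployed under whatever name it registers.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast                     # hypothetical class name, e.g. for fast storage
provisioner: example.com/nfs     # placeholder; replace with the provisioner installed in the cluster
reclaimPolicy: Delete            # policy applied to PVs created through this class
volumeBindingMode: Immediate
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-fast                 # hypothetical claim name
spec:
  storageClassName: fast         # ask for a volume of the class defined above
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi               # assumed size
```

With a working provisioner behind the class, a PV is created on demand when the claim is submitted, so nobody has to prepare PVs by hand as in the static example earlier.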

ConfigMap

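In production, configuration files need to be changed frequently. The traditional way of doing this can disrupt the running service and involves tedious steps. To solve this, Kubernetes introduced ConfigMap in version 1.2 to separate an application's configuration from the program itself. This makes the application image reusable and allows more flexible behaviour through different configurations. After packaging the application as a container image, the user can inject configuration at container creation time through environment variables or mounted files. ConfigMap and Secret together act as the configuration centre for applications in K8S: they solve the problem of getting configuration into containers and support features such as handling sensitive data (through Secret) and hot updates, which makes them one of the most useful facilities k8s provides.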

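Important requirements in this area include application configuration management, storing and using sensitive information (passwords, tokens and so on), configuring the resources containers run with, security controls, and authentication.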

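In Kubernetes, an application's variable configuration is handled by the ConfigMap resource object. Many applications read configuration from config files, command-line arguments or environment variables, and this information should never be hard-coded into the application. If an application connects to a mysql service, for example, switching to a different one should not require changing the code and rebuilding the image. **ConfigMap gives us a way to inject configuration into containers. It can hold a single property or an entire configuration file: we can use it to store the address of a mysql service, or to store the whole mysql configuration file.** Next, we look at how to use the ConfigMap resource object.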
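The two uses described above, a single property and a whole configuration file, can be sketched as follows. This is a minimal illustration under stated assumptions: the names `app-config` and `configmap-demo`, the `busybox:1.36` image and the `max_connections` setting are all hypothetical, and the value `mysql` is simply the Service name used earlier in this walkthrough.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config               # hypothetical name
data:
  MYSQL_HOST: mysql              # a single property: the mysql Service address
  my.cnf: |                      # an entire configuration file stored under one key
    [mysqld]
    max_connections = 200
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo           # hypothetical name
spec:
  containers:
  - name: demo
    image: busybox:1.36          # assumed image, used only to print the injected values
    command: ["sh", "-c", "echo $MYSQL_HOST; cat /etc/mysql/conf.d/my.cnf; sleep 3600"]
    env:
    - name: MYSQL_HOST           # injected as an environment variable
      valueFrom:
        configMapKeyRef:
          name: app-config
          key: MYSQL_HOST
    volumeMounts:
    - name: config               # injected as a mounted file
      mountPath: /etc/mysql/conf.d
  volumes:
  - name: config
    configMap:
      name: app-config
      items:
      - key: my.cnf
        path: my.cnf
```

Values mounted as files are refreshed by the kubelet after the ConfigMap changes, while values injected as environment variables are fixed when the container starts, so the mounted form is the one that benefits from hot updates.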